V100 SXM Review: The Ultimate High-Performance Computing Solution

Lisa

Published on Jul 11, 2024

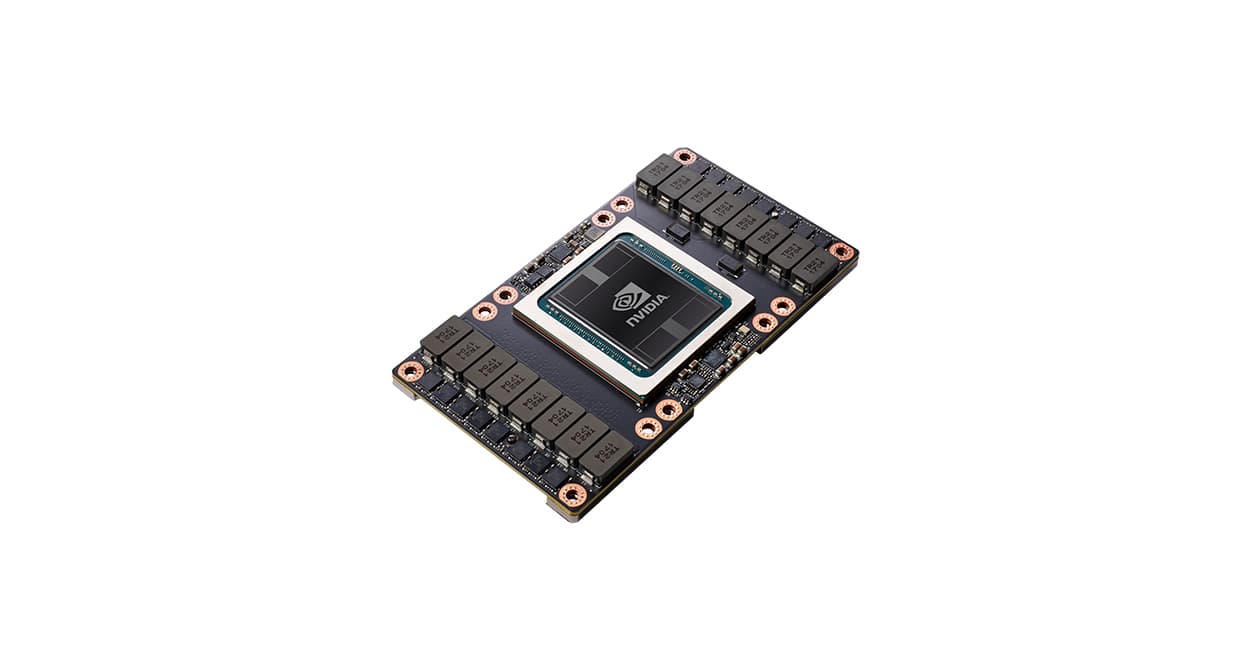

V100 SXM GPU Graphics Card Review: Introduction and Specifications

Introduction

Welcome to our in-depth review of the V100 SXM GPU, a data-center accelerator designed for AI practitioners and machine learning enthusiasts. As the demand for high-performance GPUs to train, deploy, and serve ML models continues to grow, the V100 SXM remains a strong contender. Built on NVIDIA's Volta architecture, it is no longer the newest generation, but it is still a widely used benchmark GPU for AI and offers solid performance for large model training. Let's dive into the specifications and understand why this GPU still matters to the AI community.

Specifications

The V100 SXM GPU is engineered to meet the rigorous demands of AI and machine learning applications. Here are the key specifications:

- GPU Architecture: NVIDIA Volta

- Tensor Cores: 640

- CUDA Cores: 5120

- Memory: 16 GB or 32 GB HBM2

- Memory Bandwidth: 900 GB/s

- FP32 Performance: 15.7 TFLOPS

- FP64 Performance: 7.8 TFLOPS

- Tensor Performance: 125 TFLOPS (mixed-precision FP16)

- Interconnect: NVLink
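The peak throughput figures in this list can be reproduced with simple arithmetic: number of units, times operations per unit per clock, times clock rate. The sketch below assumes NVIDIA's published 1530 MHz boost clock for the SXM2 module and the Volta layout of 2560 FP64 units (half the FP32 count); neither value appears in the list above, so treat them as assumptions. These are theoretical peaks, not measured results.

```python
# Theoretical peak throughput = units x FLOPs per unit per clock x clock rate.
# Assumption: 1530 MHz boost clock (NVIDIA's published figure for the SXM2 module).
BOOST_CLOCK_HZ = 1.53e9


def peak_tflops(units: int, flops_per_unit_per_clock: int,
                clock_hz: float = BOOST_CLOCK_HZ) -> float:
    """Return theoretical peak throughput in TFLOPS."""
    return units * flops_per_unit_per_clock * clock_hz / 1e12


# A fused multiply-add (FMA) counts as 2 floating-point operations.
fp32 = peak_tflops(5120, 2)      # 5120 CUDA cores, 1 FMA per clock each
fp64 = peak_tflops(2560, 2)      # assumption: 2560 FP64 units on Volta
tensor = peak_tflops(640, 128)   # 640 Tensor Cores, 64 FMAs per clock each

print(f"FP32:   {fp32:.1f} TFLOPS")
print(f"FP64:   {fp64:.1f} TFLOPS")
print(f"Tensor: {tensor:.1f} TFLOPS")
```

Real workloads rarely reach these peaks; they are best read as upper bounds when comparing GPUs.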

Why Choose V100 SXM for AI and Machine Learning?

The V100 SXM stands out as a strong GPU for AI and machine learning due to its robust architecture and performance capabilities. With 640 Tensor Cores and 5120 CUDA Cores, this GPU can handle the complex computations required for large model training and inference. The 16 GB or 32 GB of HBM2 memory holds model weights, activations, and data batches on the device, making it a practical choice for AI practitioners who need to access powerful GPUs on demand.

Cloud Integration and Pricing

For those looking to leverage cloud services, the V100 SXM is readily available in various cloud environments. This allows AI builders to access GPUs on demand, thereby optimizing their workflows without the need for substantial upfront investment. When comparing cloud GPU prices, the V100 SXM's hourly rate is typically lower than that of newer hardware such as H100 or GB200 clusters, although those newer systems deliver more throughput per GPU. For workloads that do not need the latest hardware, this makes the V100 SXM an attractive option for scalable AI solutions.

Performance Benchmarks

In benchmark tests, the V100 SXM outperforms its Pascal-generation predecessor, the P100, by a wide margin on deep learning workloads, largely thanks to its Tensor Cores. Newer GPUs such as the H100 are faster, but whether you're looking to train, deploy, or serve ML models, the V100 SXM still delivers strong performance, making it a valuable asset for many AI projects.

In summary, the V100 SXM GPU is a formidable tool for AI practitioners. Its impressive specifications, cloud integration options, and competitive pricing make it a strong choice for AI and machine learning tasks. Whether you're an individual AI builder or part of a large enterprise, the V100 SXM offers the performance and flexibility you need to succeed in your AI endeavors.

V100 SXM AI Performance and Usages

Why is the V100 SXM Considered the Best GPU for AI?

The V100 SXM is heralded as the best GPU for AI due to its exceptional performance in handling large model training and its ability to efficiently train, deploy, and serve ML models. This GPU is specifically designed to meet the rigorous demands of AI practitioners, offering unparalleled computational power and flexibility.

Exceptional Computational Power

The V100 SXM has 5120 CUDA cores and 640 Tensor cores, making it a powerhouse for AI tasks. It can handle demanding workloads, allowing AI builders to achieve faster training times than on GPUs without Tensor cores. Among GPUs of its generation, the V100 SXM stands out in benchmark tests.

Flexibility for AI Practitioners

One of the standout features of the V100 SXM is its ability to be accessed on demand in the cloud. This flexibility is crucial for AI practitioners who need powerful GPUs on demand without the upfront investment. The cloud GPU price for V100 SXM is competitive, making it an attractive option for both startups and established enterprises. With cloud on demand services, users can scale their GPU resources as needed, optimizing costs and performance.

V100 SXM in Cloud for AI Practitioners

Scalable Cloud Solutions

The V100 SXM is a popular choice in cloud environments due to its scalability. AI practitioners can leverage cloud solutions to access powerful GPUs on demand, facilitating large model training and deployment. The cloud price for V100 SXM is often more economical compared to purchasing physical hardware, and it offers the added benefit of scalability. Users can easily adjust their GPU resources based on project requirements, ensuring they only pay for what they need.

Comparison with H100 and GB200 Clusters

When comparing the V100 SXM to newer models like the H100, it's essential to consider the specific needs of your AI projects. While H100 clusters offer more advanced features at a higher price, the V100 SXM remains a cost-effective and powerful option for many applications. GB200 clusters, built on NVIDIA's newer Grace Blackwell platform, are another alternative at the high end, but the V100 SXM's proven track record and cloud availability make it a reliable choice for many AI builders.

Usages in Machine Learning and AI Development

Training and Deploying ML Models

The V100 SXM excels in training and deploying machine learning models. Its high memory bandwidth and Tensor cores enable rapid processing of large datasets, which is crucial for developing accurate and efficient ML models. This GPU is particularly beneficial for tasks that require extensive computational power, such as natural language processing, image recognition, and predictive analytics.

Serving ML Models in Production

Once models are trained, the V100 SXM also shines in serving ML models in production environments. Its robust architecture ensures low latency and high throughput, making it ideal for real-time applications. Whether you're deploying models in the cloud or on-premises, the V100 SXM offers the reliability and performance needed to maintain seamless operations.

Conclusion

The V100 SXM stands out as a top-tier GPU for AI and machine learning applications. Its combination of computational power, flexibility, and cost-effectiveness makes it a preferred choice for AI practitioners looking to train, deploy, and serve ML models efficiently. With the added advantage of cloud on demand availability, the V100 SXM continues to be a benchmark GPU in the AI industry.

V100 SXM Cloud Integrations and On-Demand GPU Access

What Are the Cloud Integration Capabilities of the V100 SXM?

The V100 SXM GPU is well suited to cloud environments, making it an excellent choice for AI practitioners looking to leverage powerful GPUs on demand. Its integration capabilities allow users to train, deploy, and serve ML models efficiently. With cloud platforms offering V100 SXM instances, accessing this GPU for AI tasks is straightforward.

How Does On-Demand GPU Access Work?

On-demand GPU access allows users to rent V100 SXM GPUs through cloud providers without the need for large upfront investments. This means you can utilize the best GPU for AI when you need it, and only pay for the time you use. This model is particularly beneficial for large model training and other compute-intensive tasks.

Benefits of On-Demand GPU Access

- Cost Efficiency: Avoid the high upfront costs associated with purchasing a V100 SXM GPU. Pay only for what you use, making it easier to manage budgets and scale operations.

- Scalability: Easily scale your GPU resources up or down depending on your project requirements. This is particularly useful for AI builders who need varying levels of computational power.

- Flexibility: Access powerful GPUs on demand, enabling you to switch between different GPU models like the V100 SXM, H100, or GB200 clusters based on your needs.
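The cost-efficiency argument above comes down to a break-even calculation: renting is cheaper until the cumulative rental bill reaches the cost of buying. Here is a minimal sketch of that arithmetic. The purchase price is a hypothetical placeholder, the hourly rates are taken from the range quoted in this review's pricing section, and power, hosting, and resale value are ignored.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """GPU-hours of rental after which cumulative rent equals the purchase price."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


# Hypothetical purchase price, for illustration only (not a quoted price).
HYPOTHETICAL_PURCHASE_PRICE = 10_000.0

for rate in (2.0, 5.0):
    hours = break_even_hours(HYPOTHETICAL_PURCHASE_PRICE, rate)
    print(f"${rate:.2f}/hour: break-even after {hours:,.0f} GPU-hours")
```

If your expected usage stays well below the break-even figure, on-demand access is likely the cheaper route; sustained, near-continuous usage tips the balance toward owning hardware.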

Pricing for V100 SXM in the Cloud

Cloud GPU pricing for the V100 SXM varies depending on the cloud provider and the specific configuration. Typical on-demand rates range from $2 to $5 per GPU-hour. This is generally lower than the hourly H100 price, making the V100 SXM a cost-effective choice for AI and machine learning applications that do not require the newest hardware.
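To turn an hourly rate into a budget, multiply the rate by the number of GPUs and the hours used. The sketch below applies the $2 to $5 range from this section to a hypothetical job (8 GPUs for 24 hours); the job size is an assumption chosen purely for illustration.

```python
def job_cost(gpu_count: int, hours: float, hourly_rate: float) -> float:
    """Total on-demand cost in dollars: GPUs x hours x price per GPU-hour."""
    return gpu_count * hours * hourly_rate


# Hypothetical job: 8 GPUs running for 24 hours.
GPU_COUNT = 8
HOURS = 24

low = job_cost(GPU_COUNT, HOURS, 2.0)   # lower end of the quoted range
high = job_cost(GPU_COUNT, HOURS, 5.0)  # upper end of the quoted range
print(f"Estimated cost: ${low:,.0f} to ${high:,.0f}")
```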

Comparing Cloud Prices

When comparing cloud prices, it's essential to consider the specific needs of your project. While the V100 SXM offers excellent performance for AI tasks, other GPUs like the H100 or GB200 might be more suitable for different workloads. For instance, the H100 cluster might offer better performance for certain types of large model training, but it often comes at a higher cost.

Why Choose V100 SXM for Cloud AI Practitioners?

The V100 SXM is widely regarded as one of the best GPUs for AI and machine learning. Its robust architecture and high memory bandwidth make it ideal for complex computations and large model training. Additionally, its availability in cloud environments means you can access this benchmark GPU without the need for significant capital expenditure.

Overall, the V100 SXM offers a balanced mix of performance, cost-efficiency, and scalability, making it a top choice for AI practitioners looking to leverage cloud on-demand GPU resources.

V100 SXM Pricing for Different Models

When considering the V100 SXM GPU for your AI and machine learning needs, pricing is a crucial factor. This section will address the cost differences among various models of the V100 SXM and provide insights into their value propositions, especially for AI practitioners and those involved in large model training.

V100 SXM 16GB vs. 32GB Models

The V100 SXM GPU comes in two primary memory configurations: 16GB and 32GB. The 16GB model typically costs less, making it a more budget-friendly option for those who need access to powerful GPUs on demand but do not require the highest memory capacity. On the other hand, the 32GB model is priced higher but offers more substantial memory, which is beneficial for large model training and deploying more complex ML models.
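A rough way to choose between the two configurations is to estimate how many model parameters fit in memory. The sketch below uses two common rules of thumb, both assumptions rather than measurements: about 16 bytes per parameter for mixed-precision training with the Adam optimizer (FP16 weights and gradients plus FP32 master weights and two optimizer states), and 2 bytes per parameter for FP16 inference. It ignores activation memory, which depends on batch size and architecture, so real limits are lower.

```python
BYTES_PER_GB = 1e9  # simplification: decimal gigabytes

# Rule-of-thumb bytes per parameter (assumptions, not measurements).
TRAINING_BYTES_PER_PARAM = 16   # mixed-precision training with Adam
INFERENCE_BYTES_PER_PARAM = 2   # FP16 weights only


def max_params_billions(memory_gb: float, bytes_per_param: int,
                        usable_fraction: float = 0.9) -> float:
    """Largest parameter count (in billions) whose weights and optimizer state fit.

    usable_fraction reserves headroom for the CUDA context and fragmentation;
    the 0.9 default is an illustrative assumption.
    """
    usable_bytes = memory_gb * BYTES_PER_GB * usable_fraction
    return usable_bytes / bytes_per_param / 1e9


for memory_gb in (16, 32):
    train = max_params_billions(memory_gb, TRAINING_BYTES_PER_PARAM)
    infer = max_params_billions(memory_gb, INFERENCE_BYTES_PER_PARAM)
    print(f"{memory_gb} GB: ~{train:.1f}B params (training), ~{infer:.1f}B params (inference)")
```

Models beyond these sizes need techniques such as model parallelism across several GPUs, which is where the 32GB model and NVLink become more valuable.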

Cloud GPU Pricing

For those who prefer not to invest in physical hardware, cloud GPU pricing is another consideration. The V100 SXM GPUs are available through various cloud service providers, offering flexibility and scalability. Pricing for cloud on demand services can vary significantly based on the provider, the duration of use, and the specific configurations selected. For instance, the cloud price for a V100 SXM 32GB instance may be higher than that of a 16GB instance, but the extra memory lets it fit larger models and batch sizes.

Comparing V100 SXM to Next-Gen GPUs like H100

When comparing the V100 SXM to next-gen GPUs such as the H100, it's essential to consider both performance and price. The H100 offers advanced features and potentially better performance metrics, but it also comes at a higher cost. For instance, the H100 price is generally higher than the V100 SXM, making the latter a more economical choice for many AI practitioners and machine learning engineers.
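Hourly price alone does not settle the comparison, because a faster GPU finishes the same job in fewer hours. The sketch below compares cost per job instead. All rates and the speedup factor are hypothetical placeholders; substitute measured values for your own workload.

```python
def cost_per_job(hourly_rate: float, baseline_hours: float, speedup: float = 1.0) -> float:
    """Cost of one job on a GPU that runs `speedup` times faster than the baseline."""
    return hourly_rate * baseline_hours / speedup


def break_even_speedup(baseline_rate: float, faster_gpu_rate: float) -> float:
    """Speedup the pricier GPU needs in order to match the baseline's cost per job."""
    return faster_gpu_rate / baseline_rate


# Hypothetical inputs, for illustration only.
V100_RATE = 2.0        # dollars per GPU-hour
H100_RATE = 6.0        # dollars per GPU-hour
BASELINE_HOURS = 100   # job duration on the V100 SXM

print(f"H100 must be {break_even_speedup(V100_RATE, H100_RATE):.1f}x faster to break even")
print(f"V100 job cost: ${cost_per_job(V100_RATE, BASELINE_HOURS):.0f}")
print(f"H100 job cost at 4x speedup: ${cost_per_job(H100_RATE, BASELINE_HOURS, 4.0):.0f}")
```

With these placeholder numbers the H100 is cheaper per job despite its higher hourly rate, which is why measuring actual speedup on your workload matters more than comparing price lists.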

Cluster Pricing: GB200 and H100 Clusters

For organizations requiring more extensive computational power, cluster solutions such as H100 and GB200 clusters are available. These are built on newer NVIDIA architectures (Hopper and Grace Blackwell, respectively) and do not contain V100 GPUs. They generally cost more than a cluster of V100 SXM GPUs linked over NVLink, so a V100-based cluster remains a viable option for those looking to build robust AI infrastructure without breaking the bank, provided the workload does not require the newest hardware.

Special Offers and Discounts

It's also worth noting that various GPU offers and discounts may be available from time to time. These can significantly impact the overall cost, making high-performance GPUs like the V100 SXM more accessible to a broader range of users. Keeping an eye out for such offers can result in substantial savings, especially for those looking to train, deploy, and serve ML models efficiently.

Conclusion

In summary, the pricing of the V100 SXM GPU varies based on model specifications, cloud service configurations, and the availability of special offers. While the 16GB model provides a more cost-effective solution, the 32GB model offers enhanced capabilities for more demanding tasks. When considering cloud GPU pricing and cluster solutions, it's essential to weigh the cost against the performance benefits to determine the best GPU for AI and machine learning applications.

V100 SXM Benchmark Performance

Why Benchmark Performance Matters for AI and Machine Learning

Benchmark performance is crucial for AI practitioners and machine learning enthusiasts who rely on powerful GPUs to train, deploy, and serve ML models efficiently. The V100 SXM GPU graphics card stands out as one of the best GPUs for AI, offering exceptional performance metrics that cater to the needs of large model training and cloud-based AI solutions.

V100 SXM Benchmark Performance Metrics

Compute Performance

The V100 SXM demonstrates impressive compute performance, making it a solid choice for AI builders and machine learning professionals. With its 640 Tensor Cores and 125 TFLOPS of peak mixed-precision deep learning performance, this GPU excels at the matrix-heavy calculations required for AI tasks, both when training large models and when deploying them.

Memory Bandwidth

One of the standout features of the V100 SXM is its high memory bandwidth. With 900 GB/s of bandwidth between the GPU and its HBM2 memory, it can feed large models and batches to the compute units with fewer stalls. This matters most for memory-bound operations, where throughput is limited by how fast data can be read from device memory rather than by arithmetic. For AI practitioners looking to leverage cloud on demand services, the V100 SXM offers a compelling option with its robust memory capabilities.
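Whether a kernel is limited by memory bandwidth or by compute can be estimated with a simple roofline model: attainable throughput is the smaller of peak compute and arithmetic intensity times bandwidth. The sketch below uses the FP32 and bandwidth figures from the specifications in this review; the roofline model is a simplification that ignores caches and latency.

```python
PEAK_FP32_TFLOPS = 15.7        # from the specifications
MEMORY_BANDWIDTH_GBPS = 900.0  # from the specifications


def ridge_point() -> float:
    """Arithmetic intensity (FLOPs per byte) above which a kernel is compute-bound."""
    return PEAK_FP32_TFLOPS * 1e12 / (MEMORY_BANDWIDTH_GBPS * 1e9)


def attainable_tflops(flops_per_byte: float) -> float:
    """Roofline estimate: min(peak compute, intensity x memory bandwidth)."""
    bandwidth_bound = flops_per_byte * MEMORY_BANDWIDTH_GBPS * 1e9 / 1e12
    return min(PEAK_FP32_TFLOPS, bandwidth_bound)


print(f"Ridge point: {ridge_point():.1f} FLOPs/byte")
for intensity in (1.0, 10.0, 100.0):
    print(f"{intensity:>5.0f} FLOPs/byte -> {attainable_tflops(intensity):.1f} TFLOPS attainable")
```

Kernels below the ridge point, such as element-wise operations, benefit directly from the high bandwidth, while large matrix multiplications sit above it and are limited by compute instead.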

Energy Efficiency

Energy efficiency is another critical factor in benchmark performance, especially when considering the cloud price and operational costs. The V100 SXM draws less power per GPU than newer data-center parts such as the H100, although those newer GPUs deliver more performance per watt. For workloads that fit comfortably on a V100, its moderate power draw helps keep operating costs down, which is one reason cloud GPU prices and GPU offers for the V100 SXM remain competitive for AI and machine learning projects.
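Efficiency can be put into numbers as peak throughput per watt and as energy cost per hour. The sketch below assumes a 300 W board power for the V100 SXM2 (NVIDIA's published maximum, not stated in this review) and a hypothetical electricity price; it uses the FP32 peak from the specifications, so it gives an upper bound rather than a measured figure.

```python
PEAK_FP32_TFLOPS = 15.7     # from the specifications
BOARD_POWER_WATTS = 300.0   # assumption: published maximum power for the SXM2 module


def gflops_per_watt(peak_tflops: float, board_power_watts: float) -> float:
    """Peak throughput per watt, in GFLOPS/W."""
    return peak_tflops * 1000.0 / board_power_watts


def energy_cost_per_hour(board_power_watts: float, price_per_kwh: float) -> float:
    """Electricity cost of running one GPU at full power for one hour."""
    return board_power_watts / 1000.0 * price_per_kwh


# Hypothetical electricity price, for illustration only.
PRICE_PER_KWH = 0.10

print(f"{gflops_per_watt(PEAK_FP32_TFLOPS, BOARD_POWER_WATTS):.1f} GFLOPS/W (FP32 peak)")
print(f"${energy_cost_per_hour(BOARD_POWER_WATTS, PRICE_PER_KWH):.3f} per GPU-hour in electricity")
```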

Comparative Analysis with Other GPUs

V100 SXM vs. H100

When comparing the V100 SXM to the newer H100, it's essential to consider both performance and cost. While the H100 offers enhanced features and higher performance metrics, the V100 SXM remains a strong contender due to its proven reliability and more accessible cloud GPU prices. For those looking to balance performance with budget, the V100 SXM provides an excellent alternative to the more expensive H100 price point.

V100 SXM in Cloud Environments

The V100 SXM excels in cloud environments, offering scalable solutions for AI practitioners who need GPUs on demand. Its robust performance metrics ensure that you can train, deploy, and serve ML models efficiently, making it one of the best GPUs for AI in cloud settings. The competitive cloud price and availability of various GPU offers make the V100 SXM an attractive option for those looking to leverage cloud-based AI solutions.

Real-World Applications and Use Cases

Large Model Training

The V100 SXM's high compute performance and memory bandwidth make it ideal for large model training. AI practitioners can train complex models faster and more efficiently, reducing the time to deployment and improving overall productivity.

Cloud for AI Practitioners

For those utilizing cloud services, the V100 SXM offers a reliable and cost-effective solution. Its energy efficiency and competitive cloud GPU prices make it an excellent choice for cloud-based AI projects, allowing users to access powerful GPUs on demand without breaking the bank.

AI and Machine Learning Deployment

Deploying AI and machine learning models is seamless with the V100 SXM. Its robust performance ensures that models can be served quickly and accurately, meeting the demands of real-time applications and large-scale deployments.

Frequently Asked Questions about the V100 SXM GPU Graphics Card

What makes the V100 SXM the best GPU for AI and machine learning?

The V100 SXM is considered the best GPU for AI and machine learning due to its exceptional performance and efficiency in handling large model training and deployment. It offers 640 Tensor Cores, which significantly accelerate AI computations, making it ideal for training, deploying, and serving ML models. Its high memory bandwidth and deep learning capabilities make it a preferred choice for AI practitioners looking to access powerful GPUs on demand.

How does the V100 SXM compare to the H100 in terms of performance and price?

While the H100 is a next-gen GPU with advanced features, the V100 SXM remains a strong contender due to its proven track record and cost-effectiveness. The H100 price is generally higher, reflecting its newer technology and enhanced capabilities. However, for many AI builders, the V100 SXM offers a balanced mix of performance and value, making it a popular choice in cloud GPU offerings and on-premises deployments.

Can the V100 SXM be used in a cloud environment for AI practitioners?

Absolutely. The V100 SXM is widely available in cloud environments, allowing AI practitioners to access powerful GPUs on demand. Many cloud providers offer the V100 SXM as part of their GPU offerings, enabling users to scale their AI and machine learning workloads efficiently without the need for significant upfront investment in hardware. This flexibility is crucial for those needing to train and deploy large models quickly and cost-effectively.

Is the V100 SXM suitable for large model training?

Yes, the V100 SXM is highly suitable for large model training. Its architecture is designed to handle extensive computational tasks, making it ideal for training large-scale neural networks. The GPU's high memory capacity and bandwidth ensure that it can manage the substantial data throughput required for large model training, providing faster and more efficient results.

Can the V100 SXM be used in a GB200 cluster?

No. GB200 clusters are built on NVIDIA's Grace Blackwell platform and do not include V100 GPUs. Multiple V100 SXM GPUs can, however, be linked over NVLink in a multi-GPU server or cluster, providing a substantial boost in computational power. This setup is particularly beneficial for enterprises looking to deploy and serve ML models at scale, and it is generally a lower-cost route than a GB200 cluster for high-performance computing needs that do not require the newest hardware.

How does the cloud GPU price for the V100 SXM compare to other GPUs?

The cloud GPU price for the V100 SXM is competitive, especially when considering its performance capabilities. While newer GPUs like the H100 may have a higher cloud price due to their advanced features, the V100 SXM provides a robust and reliable option for AI practitioners. Many cloud providers offer flexible pricing plans for the V100 SXM, making it accessible for a wide range of budgets and project requirements.

Why should AI builders consider the V100 SXM for their projects?

AI builders should consider the V100 SXM for its proven performance, scalability, and cost-effectiveness. Its ability to handle complex AI and machine learning tasks, combined with its availability in cloud environments, makes it a versatile and powerful tool. Whether you are training, deploying, or serving ML models, the V100 SXM provides the necessary computational power to achieve optimal results. Additionally, its competitive pricing and widespread support in cloud services make it an attractive option for AI builders looking to maximize their resources.

Final Verdict on V100 SXM GPU Graphics Card

The V100 SXM GPU stands out as a powerful and efficient tool for AI practitioners looking to train, deploy, and serve machine learning models at scale. Its strong performance in large model training and cloud-based AI applications keeps it a serious contender in the market. While the V100 SXM excels in many areas, it is important to consider both its strengths and areas where it could improve. By evaluating these aspects, AI builders and organizations can make informed decisions about leveraging this GPU for their specific needs. Below, we break down the strengths and areas for improvement of the V100 SXM GPU.

Strengths

- Exceptional performance in large model training and inference tasks.

- Highly efficient for AI practitioners seeking to access powerful GPUs on demand.

- Strong support for cloud-based AI applications, making it ideal for deploying and serving ML models.

- Robust architecture that ensures reliability and stability during intensive computational tasks.

- Seamless integration with cloud GPU services, enabling cost-effective scaling and flexibility.

Areas of Improvement

- Cloud cost per unit of work can be relatively high, since newer models like the H100 finish the same job in fewer GPU-hours.

- Limited availability in certain regions, which can affect access to GPUs on demand.

- Performance per watt is lower than that of the latest GPUs, impacting operational costs.

- Upfront investment might be substantial for small to mid-sized AI builders.

- Support for newer software frameworks and updates may lag behind newer GPU releases.