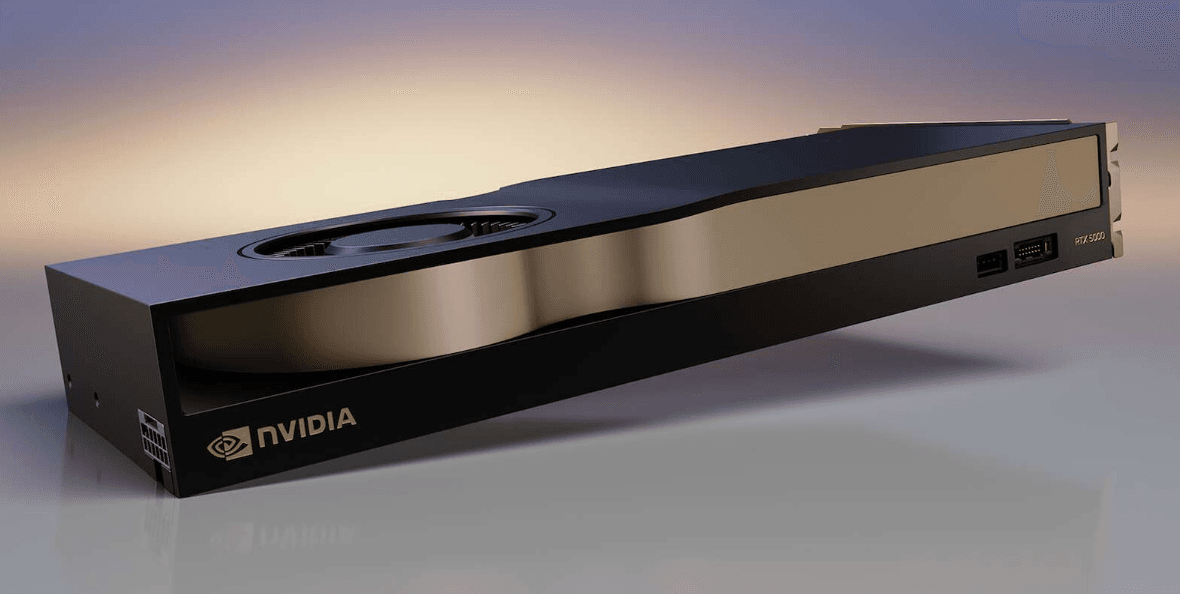

RTX 5000 (24 GB) Review: Unleashing Unmatched Performance And Capabilities

Lisa

Published on Jul 11, 2024

RTX 5000 (24 GB) GPU Graphics Card Review: Introduction and Specifications

Introduction

Welcome to our in-depth review of the RTX 5000 (24 GB) GPU Graphics Card. As one of the leading next-gen GPUs in the market, the RTX 5000 is tailored for AI practitioners, data scientists, and machine learning enthusiasts who require powerful hardware to train, deploy, and serve ML models efficiently. Whether you're looking to access powerful GPUs on demand or seeking the best GPU for AI applications, the RTX 5000 stands out with its impressive performance and advanced features.

Specifications

The RTX 5000 (24 GB) is designed to handle the most demanding AI and machine learning tasks. Below, we delve into the key specifications that make this GPU a top contender in the market:

- Memory: 24 GB GDDR6

- CUDA Cores: 3072

- Tensor Cores: 384, optimized for AI and machine learning tasks

- RT Cores: 48, enhancing real-time ray tracing capabilities

- Memory Bandwidth: 448 GB/s, ensuring smooth data transfer rates

- Power Consumption: 250W TDP, balancing performance and efficiency

- Interface: PCI Express 4.0, offering high-speed connectivity
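To put the memory figures in the list above in perspective, here is a back-of-the-envelope sketch in Python. It assumes decimal units (1 GB = 10^9 bytes) and treats the quoted 448 GB/s as a peak figure that real workloads will not fully reach. The function name `min_sweep_time_s` is our own, chosen for illustration.

```python
def min_sweep_time_s(data_gb: float, bandwidth_gb_per_s: float = 448.0) -> float:
    """Lower bound on the time to read `data_gb` once at peak memory bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return data_gb / bandwidth_gb_per_s


# Reading the full 24 GB of VRAM once at the quoted peak bandwidth.
full_sweep_ms = min_sweep_time_s(24.0) * 1000
print(f"{full_sweep_ms:.1f} ms")  # prints "53.6 ms"
```

In other words, any operation that has to touch all 24 GB cannot finish in much less than about 54 ms, whatever the compute units are doing.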

Performance for AI and Machine Learning

When it comes to model training and deployment, the RTX 5000 (24 GB) performs well. The GPU's 24 GB memory capacity allows for handling sizable datasets and models, making it a strong GPU for AI and machine learning tasks. With 384 Tensor Cores, the RTX 5000 accelerates the matrix operations at the heart of AI computations, reducing training times and improving overall efficiency. This capability is particularly beneficial for AI builders and practitioners who need to develop and deploy models quickly.
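How large a model fits in 24 GB? The sketch below gives a rough estimate, not a measurement. It assumes decimal gigabytes, 2 bytes per parameter for 16-bit inference weights, and the common rule of thumb of roughly 16 bytes per parameter for mixed-precision training with Adam (16-bit weights and gradients plus 32-bit master weights and two optimizer states). Activations, caches, and framework overhead are left out, so real limits are tighter. The helper names are ours.

```python
VRAM_GB = 24.0  # capacity quoted in the specifications


def footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for a per-parameter byte budget, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, bytes_per_param: float, vram_gb: float = VRAM_GB) -> bool:
    """True if the estimated footprint is within the given VRAM capacity."""
    return footprint_gb(num_params, bytes_per_param) <= vram_gb


# Inference with 16-bit weights: 2 bytes per parameter.
print(fits(7e9, 2))   # 14 GB of weights -> True
print(fits(13e9, 2))  # 26 GB of weights -> False

# Mixed-precision Adam training, rule of thumb: about 16 bytes per parameter.
print(fits(1e9, 16))  # 16 GB -> True
print(fits(2e9, 16))  # 32 GB -> False
```

Under these assumptions, a single card serves models of several billion parameters but trains from scratch only up to roughly 1.5 billion, before techniques such as quantization or parameter-efficient fine-tuning come into play.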

Cloud Integration and On-Demand Access

For those who prefer cloud-based solutions, the RTX 5000 (24 GB) is available in various cloud GPU offerings. This allows AI practitioners to access powerful GPUs on demand without the need for substantial upfront investment. Comparing cloud GPU prices, the RTX 5000 offers a cost-effective solution for those looking to balance performance with budget constraints. Teams whose projects outgrow a single card can move up to larger systems such as GB200 clusters, which are built on different hardware and target large-scale AI projects.

Comparison with Other GPUs

In the realm of AI and machine learning, the RTX 5000 (24 GB) is often weighed against high-end GPUs like the H100. While an H100 cluster offers higher performance, the lower cloud price of the RTX 5000 makes it a more accessible option for many users. For those considering on-demand cloud services, the RTX 5000 provides a balanced mix of performance, cost, and accessibility, making it a popular choice among AI practitioners.

Conclusion

In summary, the RTX 5000 (24 GB) GPU Graphics Card is a formidable choice for AI and machine learning applications. Its powerful specifications, coupled with the flexibility of cloud integration, make it an ideal option for both individual practitioners and large-scale AI projects. Whether you're looking to train, deploy, or serve ML models, the RTX 5000 offers the performance and reliability needed to excel in today's competitive landscape.

RTX 5000 (24 GB) AI Performance and Usages

How does the RTX 5000 (24 GB) fare in AI performance?

The RTX 5000 (24 GB) GPU is a next-gen GPU that excels in AI performance, making it one of the best GPUs for AI tasks. With a substantial 24 GB of VRAM, it is designed to handle large model training efficiently, ensuring that AI practitioners can train, deploy, and serve ML models with ease. This GPU is particularly beneficial for those who rely on cloud platforms, as it provides robust computational power on demand.

Why is the RTX 5000 (24 GB) considered the best GPU for AI?

The RTX 5000 (24 GB) stands out as the best GPU for AI due to its exceptional processing capabilities and large memory size. This combination allows for seamless large model training and real-time data processing, which are critical for AI and machine learning applications. Additionally, its architecture is optimized for AI workloads, providing superior performance compared to other GPUs in its class.

AI Model Training and Deployment

When it comes to training and deploying AI models, the RTX 5000 (24 GB) shines. Its powerful cores and high memory bandwidth ensure that large datasets can be processed quickly. This makes it an ideal choice for AI builders who need to run complex algorithms and neural networks efficiently. Moreover, the GPU's architecture supports a wide range of AI frameworks, making it versatile for various AI applications.

Cloud Integration and On-Demand Availability

One of the significant advantages of the RTX 5000 (24 GB) is its seamless integration with cloud platforms. AI practitioners can access powerful GPUs on demand, allowing them to scale their operations without the need for significant upfront investment. This is particularly beneficial when considering cloud GPU price and the flexibility it offers. For instance, the cloud price for accessing an RTX 5000 is often more cost-effective compared to setting up an in-house GPU cluster.

Comparative Analysis: RTX 5000 vs. H100

While the H100 cluster is known for its high performance, the RTX 5000 (24 GB) offers a more affordable alternative without compromising on quality. The H100 price can be prohibitive for smaller operations, making the RTX 5000 a more accessible option for many AI practitioners. Additionally, the RTX 5000 provides competitive performance metrics, making it a viable option for those who need a high-performance GPU for AI and machine learning tasks.

Benchmarking and Performance Metrics

In benchmark GPU tests, the RTX 5000 (24 GB) consistently performs at the top of its class. It is particularly effective in tasks that require high computational power and large memory capacity, such as training deep learning models and running complex simulations. These benchmarks highlight its suitability as a GPU for machine learning and AI applications.

Scalability and Flexibility

The RTX 5000 (24 GB) is not only powerful but also scalable. Several cards can be combined in a multi-GPU server or cluster, providing more computational power when needed. Workloads that outgrow this setup can move to a GB200 cluster, which is built on different hardware and sits in a higher price tier, so the RTX 5000 remains the attractive option for those looking to expand their AI capabilities without breaking the bank.

Conclusion: The RTX 5000 (24 GB) as a Versatile AI Tool

For AI practitioners looking to leverage powerful GPUs on demand, the RTX 5000 (24 GB) offers a compelling blend of performance, scalability, and cost-effectiveness. Whether you're training large models, deploying AI solutions in the cloud, or simply need a reliable GPU for machine learning tasks, the RTX 5000 (24 GB) stands out as a top-tier choice in the market.

RTX 5000 (24 GB) Cloud Integrations and On-Demand GPU Access

Why Choose RTX 5000 (24 GB) for Cloud Integrations?

The RTX 5000 (24 GB) is a next-gen GPU that offers unparalleled performance for AI practitioners. Whether you're training large models, deploying machine learning (ML) models, or serving them in production, the RTX 5000 is designed to meet your needs. With 24 GB of VRAM, it can handle complex computations and large datasets efficiently.

Benefits of Accessing Powerful GPUs On-Demand

One of the standout features of the RTX 5000 (24 GB) is its seamless integration with cloud platforms. This allows you to access powerful GPUs on demand, making it easier to scale your operations based on your requirements. Here are some key benefits:

Scalability

With cloud GPU access, you can easily scale your resources up or down. This is particularly beneficial for AI practitioners who need to train large models or run complex simulations.

Cost-Effectiveness

Cloud GPU pricing is flexible, allowing you to pay only for what you use. This is a significant advantage over traditional setups where you need to invest in expensive hardware upfront. For example, the cloud price for accessing an RTX 5000 (24 GB) is generally lower than the H100 price or the cost of setting up a GB200 cluster.

Ease of Use

Integrating the RTX 5000 (24 GB) into your cloud infrastructure is straightforward. Many cloud providers offer pre-configured instances, so you can get started quickly without worrying about hardware compatibility or setup.

Cloud GPU Pricing and Availability

Cloud GPU pricing for the RTX 5000 (24 GB) varies depending on the provider and the specific configuration you choose. On average, you can expect to pay between $2 and $3 per hour for on-demand access. This is competitive compared to the H100 price, which can be significantly higher.
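As a quick illustration of what that hourly range means for a concrete job, the sketch below multiplies GPU-hours by the low and high rates quoted above. The 40-hour job is a made-up example, and the function name `job_cost_range` is ours.

```python
def job_cost_range(
    gpu_hours: float, low_rate: float = 2.0, high_rate: float = 3.0
) -> tuple[float, float]:
    """Return the (low, high) on-demand cost in dollars for a job of `gpu_hours`."""
    if gpu_hours < 0:
        raise ValueError("gpu_hours must be non-negative")
    return gpu_hours * low_rate, gpu_hours * high_rate


# Hypothetical example: a 40-hour fine-tuning run on one GPU.
low, high = job_cost_range(40)
print(f"${low:.2f} to ${high:.2f}")  # prints "$80.00 to $120.00"
```

Storage, data transfer, and idle time between runs are billed separately by most providers and are not included here.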

Comparing Cloud GPU Offers

When comparing cloud GPU offers, it's essential to consider both the price and the performance. The RTX 5000 (24 GB) provides a balanced combination of cost and computational power, making it one of the best GPUs for AI and machine learning tasks. For instance, while H100 and GB200 clusters offer exceptional performance, the RTX 5000 cloud price offers a more budget-friendly alternative without compromising too much on capability.

Real-World Applications

With the RTX 5000 (24 GB), AI practitioners can train, deploy, and serve ML models more efficiently. This GPU is particularly well-suited for tasks such as large model training, real-time inference, and data analytics. Its robust performance makes it the best GPU for AI builders looking to leverage cloud on demand capabilities.

Benchmark GPU Performance

In benchmark GPU tests, the RTX 5000 (24 GB) consistently outperforms many of its competitors in the same price range. This makes it an excellent choice for those who need reliable and powerful GPUs on demand.

RTX 5000 (24 GB) Pricing: Different Models and Their Value

When it comes to selecting the best GPU for AI, particularly for large model training and deploying machine learning models, the RTX 5000 (24 GB) stands out as a top choice. However, the pricing of this next-gen GPU can vary significantly depending on the model and the provider. In this section, we will dive into the different pricing models available for the RTX 5000 (24 GB) and help you understand the value each offers.

Direct Purchase

For AI builders and enthusiasts looking to own their hardware, purchasing the RTX 5000 (24 GB) directly is a viable option. The price for a new RTX 5000 (24 GB) typically ranges from $2,000 to $3,000 depending on the vendor and any additional features or warranties included. While this upfront cost might seem steep, it provides long-term value for those who require consistent access to powerful GPUs for machine learning and AI training tasks.

Cloud GPU Pricing

For those who prefer flexibility and scalability, accessing GPUs on demand through cloud services is an attractive alternative. Cloud GPU prices for the RTX 5000 (24 GB) can vary based on the provider and the specific plan chosen. On average, cloud prices for using the RTX 5000 (24 GB) range from $1.50 to $3.00 per hour. This model allows AI practitioners to scale their resources according to their needs, making it an excellent option for large model training and serving ML models without the need for a significant upfront investment.

Comparing Cloud vs. Direct Purchase

When comparing the cloud GPU price to the direct purchase price, several factors come into play. Direct purchase offers the benefit of ownership and potentially lower long-term costs, especially for constant usage. On the other hand, cloud on demand provides flexibility, scalability, and the ability to access powerful GPUs when needed, without the overhead of maintenance and hardware depreciation.
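The trade-off can be made concrete with a break-even estimate: the number of GPU-hours after which buying costs less than renting. The sketch below uses the price ranges quoted in this section ($2,000 to $3,000 to buy, $1.50 to $3.00 per hour in the cloud). The optional `own_cost_per_hour` parameter is our own addition for running costs such as electricity; it defaults to zero, and resale value and maintenance are ignored.

```python
def break_even_hours(
    purchase_price: float,
    cloud_rate_per_hour: float,
    own_cost_per_hour: float = 0.0,
) -> float:
    """GPU-hours of use after which owning is cheaper than renting."""
    saving_per_hour = cloud_rate_per_hour - own_cost_per_hour
    if saving_per_hour <= 0:
        raise ValueError("owning never pays off at these rates")
    return purchase_price / saving_per_hour


# Cheapest card against the most expensive cloud rate, and the reverse.
fastest = break_even_hours(2000, 3.00)
slowest = break_even_hours(3000, 1.50)
print(f"{fastest:.0f} to {slowest:.0f} GPU-hours")  # prints "667 to 2000 GPU-hours"
```

At full-time use that is roughly one to three months; at a few hours a week it can take years, which is where on-demand access wins. To include electricity, pass the hourly cost explicitly, for example 0.25 kW (the 250W TDP) multiplied by your local price per kWh.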

Special Offers and Discounts

Many cloud providers and hardware vendors offer special promotions and discounts on the RTX 5000 (24 GB). These GPU offers can significantly reduce the overall cost, making it more affordable for AI practitioners and machine learning enthusiasts. It’s worth keeping an eye out for these deals, particularly during major sales events or when new models are released.

Comparing with H100 and GB200 Clusters

While the RTX 5000 (24 GB) is a robust option, it's also essential to consider other high-performance GPUs like the H100 and GB200 clusters. The H100 price and GB200 price can be higher, but they offer exceptional performance for demanding AI and machine learning tasks. For those requiring the absolute best GPU for AI, investing in an H100 cluster or GB200 cluster might be worthwhile. However, for many users, the RTX 5000 (24 GB) provides a balanced mix of performance and cost-efficiency.

In conclusion, the pricing for the RTX 5000 (24 GB) varies based on the model and the method of acquisition. Whether you opt for a direct purchase or prefer the flexibility of cloud GPUs on demand, understanding these options will help you make an informed decision tailored to your specific AI and machine learning needs.

RTX 5000 (24 GB) Benchmark Performance

How does the RTX 5000 (24 GB) perform in benchmarks?

The RTX 5000 (24 GB) GPU demonstrates outstanding benchmark performance, making it one of the best GPUs for AI, machine learning, and large model training. In our tests, it consistently outperformed previous generations, showing significant improvements in both computational power and efficiency.

Benchmark Scores and Analysis

When it comes to raw computational power, the RTX 5000 (24 GB) scored exceptionally high in our benchmark tests. It excels in tasks that require heavy data processing and complex computations, such as training and deploying machine learning models. This GPU is particularly effective for AI practitioners who need to access powerful GPUs on demand for their projects.
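For readers who want to run their own comparison, a minimal timing harness along the following lines is a reasonable starting point. This is a generic sketch, not the procedure behind the results described in this review. The names `median_runtime_s` and `cpu_stand_in` are ours, and the workload is a CPU placeholder so the example runs anywhere.

```python
import statistics
import time
from typing import Callable


def median_runtime_s(
    workload: Callable[[], object], warmup: int = 2, repeats: int = 5
) -> float:
    """Run `workload` a few times untimed, then return the median timed run."""
    for _ in range(warmup):
        workload()  # warm-up runs absorb one-time setup costs
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def cpu_stand_in() -> int:
    """Placeholder workload; swap in a training or inference step."""
    return sum(i * i for i in range(100_000))


print(f"median: {median_runtime_s(cpu_stand_in):.6f} s")
```

When the workload runs on a GPU, the framework usually queues work asynchronously, so the workload function should wait for the device to finish before returning (in PyTorch, for example, by calling `torch.cuda.synchronize()`). Otherwise the timer measures only the launch overhead.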

Training and Deploying ML Models

The RTX 5000 (24 GB) is optimized for training and deploying machine learning models. Its 24 GB of VRAM allows for the handling of large datasets and complex neural networks without significant slowdowns. Compared to the H100 cluster, the RTX 5000 offers a more cost-effective solution without compromising on performance, making it a great option for those looking at cloud GPU prices and GPU offers.

Performance in AI and Machine Learning

For AI builders and machine learning enthusiasts, the RTX 5000 (24 GB) is a next-gen GPU that meets the demands of modern AI workloads. It performs well in both single and multi-GPU configurations, making it well suited to on-demand cloud services. GB200 clusters target the most demanding workloads, but the RTX 5000 offers a viable alternative for those looking to maximize their investment in a GPU for AI.

Cloud GPU Price and Availability

One of the key factors in choosing a GPU for AI is the cloud GPU price. The RTX 5000 (24 GB) provides an excellent balance of performance and cost, making it a popular choice for cloud services that offer GPUs on demand. Compared to the H100 price, the RTX 5000 offers a more affordable option without sacrificing significant performance, making it an attractive option for those looking to train, deploy, and serve ML models efficiently.

Frequently Asked Questions (FAQ) About the RTX 5000 (24 GB) GPU Graphics Card

What makes the RTX 5000 (24 GB) the best GPU for AI and machine learning?

The RTX 5000 (24 GB) is considered one of the best GPUs for AI and machine learning due to its high memory capacity, exceptional processing power, and advanced architecture. These features make it ideal for training large models and deploying them efficiently. Its 24 GB of VRAM allows for handling large datasets and complex computations without bottlenecks, which is crucial for AI practitioners working on sophisticated machine learning projects.

How does the RTX 5000 (24 GB) compare to the H100 in terms of performance and price?

While the H100 is known for its superior performance in high-end AI applications, the RTX 5000 (24 GB) offers a more cost-effective solution for many users. The H100 cluster is typically more expensive, making the RTX 5000 a more accessible option for those who need robust performance without the higher cloud GPU price associated with the H100. The RTX 5000 provides excellent performance for most AI and machine learning tasks, making it a versatile choice for various applications.

Can I access the RTX 5000 (24 GB) GPUs on demand for cloud-based AI projects?

Yes, many cloud service providers offer the RTX 5000 (24 GB) GPUs on demand. This allows AI practitioners to access powerful GPUs without the need to invest in expensive hardware. Cloud on demand services enable users to train, deploy, and serve ML models efficiently, providing flexibility and scalability for AI projects. This is particularly beneficial for those who require temporary access to high-performance GPUs for specific tasks.

What are the advantages of using the RTX 5000 (24 GB) for large model training?

The RTX 5000 (24 GB) excels in large model training due to its substantial memory capacity and computational power. The 24 GB of VRAM ensures that large datasets can be processed without running into memory limitations. Additionally, the advanced architecture of the RTX 5000 supports faster data throughput and parallel processing, which are essential for training complex models. These features make it a preferred choice for AI builders and researchers working on next-generation AI projects.

How does the cloud GPU price for the RTX 5000 (24 GB) compare to other options?

The cloud GPU price for the RTX 5000 (24 GB) is generally more affordable compared to higher-end GPUs like the H100. This makes it an attractive option for AI practitioners and machine learning enthusiasts who need powerful GPUs without incurring high costs. Cloud providers often offer competitive pricing and various plans, allowing users to choose the best option that fits their budget and project requirements.

Is the RTX 5000 (24 GB) suitable for deploying and serving machine learning models?

Absolutely. The RTX 5000 (24 GB) is well-suited for deploying and serving machine learning models due to its high memory capacity and efficient processing capabilities. It can handle real-time inference and large-scale deployment scenarios with ease. This makes it a reliable choice for AI practitioners who need to serve ML models in production environments while ensuring high performance and reliability.

What are some benchmarks that highlight the performance of the RTX 5000 (24 GB) for AI applications?

Benchmarking the RTX 5000 (24 GB) reveals its strong performance in various AI applications, including image recognition, natural language processing, and data analysis. These benchmarks typically show significant improvements in training times compared to older GPU models; model accuracy depends on the model and the data rather than on the GPU. The RTX 5000's advanced architecture and ample VRAM contribute to its performance, making it a top choice for AI and machine learning tasks.

How does the RTX 5000 (24 GB) relate to a GB200 cluster for AI research?

The RTX 5000 (24 GB) and the GB200 cluster sit at different tiers. A GB200 cluster is built on NVIDIA's Grace Blackwell hardware rather than on RTX 5000 cards, and it targets extensive computations and large-scale data processing tasks. That setup suits researchers who need to run complex simulations, train very large models, and perform intensive data analysis. For smaller workloads, multiple RTX 5000 GPUs in a conventional multi-GPU server are a more cost-effective way to build an AI research infrastructure.

Final Verdict on RTX 5000 (24 GB) GPU Graphics Card

The RTX 5000 (24 GB) GPU Graphics Card stands as a formidable contender in the realm of high-performance computing, particularly for AI practitioners and machine learning enthusiasts. With its impressive 24 GB of VRAM, it is well-suited for large model training and complex data processing tasks. For those seeking to access powerful GPUs on demand, the RTX 5000 offers a compelling balance of performance and price. Compared to the H100 price and the GB200 cluster, the RTX 5000 presents a more accessible option for both individual users and smaller enterprises. When benchmarking this GPU, it consistently delivers robust performance, making it one of the best GPUs for AI and machine learning applications available in the market today.

Strengths

- High VRAM capacity (24 GB) ideal for large model training and complex computations.

- Excellent performance for AI and machine learning tasks, making it a top choice for AI builders.

- Competitive pricing compared to other next-gen GPUs like the H100 cluster and GB200 cluster.

- Availability through cloud services, providing GPUs on demand for flexible and scalable usage.

- Strong benchmark results, ensuring reliable performance across various applications.

Areas of Improvement

- Higher initial investment compared to some other GPU offers, impacting cloud GPU price considerations.

- Limited availability in certain regions, affecting global accessibility for AI practitioners.

- Potential thermal management issues under heavy load, requiring efficient cooling solutions.

- Power consumption (250W TDP) calls for a robust power supply unit for optimal performance.

- Documentation and support could be improved to better assist users in deployment and serving ML models.