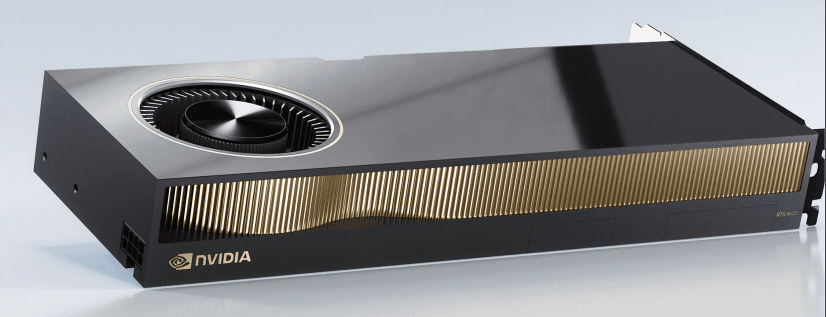

RTX 4090 (24 GB) Review: The Ultimate Gaming Powerhouse

Lisa

Published on Jul 11, 2024

RTX 4090 (24 GB) Review: Introduction and Specifications

Introduction to RTX 4090 (24 GB)

Welcome to our comprehensive review of the RTX 4090 (24 GB), NVIDIA's flagship GeForce 40-series GPU. As demand for on-demand GPU capacity grows, particularly among AI practitioners training large models, the RTX 4090 (24 GB) has emerged as a strong contender. Whether you want to train, deploy, and serve ML models or simply need a capable GPU for AI work, this card promises exceptional performance.

Specifications of RTX 4090 (24 GB)

Let's dive into the specifications that make the RTX 4090 (24 GB) a standout choice for AI builders and machine learning enthusiasts.

Core Architecture

The RTX 4090 (24 GB) is built on NVIDIA's Ada Lovelace architecture, the successor to Ampere, which offers significant improvements in performance and efficiency. The architecture is designed to handle complex computations, which suits both price-sensitive cloud deployments and demanding AI applications.

Memory and Bandwidth

With 24 GB of GDDR6X memory on a 384-bit bus, the RTX 4090 delivers roughly 1 TB/s of memory bandwidth and enough capacity for many model training and data-intensive tasks. The high bandwidth keeps the compute units supplied with data, reducing bottlenecks in memory-bound workloads.
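To put the 24 GB figure in perspective, here is a rough budgeting sketch. It relies on a common rule of thumb that does not come from this review: about 2 bytes per parameter for FP16 weights at inference, and about 16 bytes per parameter for mixed-precision training with Adam (weights, gradients, and optimizer state). Activations and framework overhead are ignored, and the function name and the 90% usable fraction are illustrative assumptions.

```python
# Rough VRAM budgeting for a 24 GB card. The bytes-per-parameter figures are
# rule-of-thumb assumptions, not measurements, and ignore activations,
# KV caches, and framework overhead.

GIB = 1024 ** 3

BYTES_PER_PARAM = {
    "fp16_inference": 2,        # FP16 weights only
    "fp32_inference": 4,        # FP32 weights only
    "mixed_adam_training": 16,  # FP16 weights + grads, FP32 master weights + Adam moments
}


def max_params_billions(vram_gib: float, mode: str, usable_fraction: float = 0.9) -> float:
    """Estimate how many billions of parameters fit in the given VRAM."""
    usable_bytes = vram_gib * GIB * usable_fraction
    return usable_bytes / BYTES_PER_PARAM[mode] / 1e9


if __name__ == "__main__":
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: ~{max_params_billions(24, mode):.1f}B parameters")
```

Under these assumptions, the card can hold a model of roughly 11 to 12 billion parameters for FP16 inference, but only around 1.4 billion parameters for full mixed-precision training, before activations are counted.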

CUDA Cores and Tensor Cores

Equipped with 16,384 CUDA cores and 512 fourth-generation Tensor cores, the RTX 4090 excels at parallel processing. This makes it a strong GPU for AI and machine learning applications, where many operations must execute simultaneously.

Ray Tracing and AI Capabilities

The RTX 4090 features third-generation RT cores for hardware ray tracing, providing realistic lighting and shadows in rendering tasks. Its AI-driven features, powered by Tensor cores, accelerate tasks like image recognition and natural language processing.

Power Consumption and Efficiency

Despite its performance, the RTX 4090 is designed to be efficient for the work it does, although its rated board power is a substantial 450 W. Efficiency matters for those deploying and serving ML models in cloud environments, where power draw translates directly into cost. Compared with data-center alternatives such as an H100 cluster or a GB200 cluster, the RTX 4090 is considerably cheaper to rent per hour.
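The link between power draw and running cost is simple arithmetic. The sketch below assumes the card runs at its rated 450 W board power for the whole job; the electricity tariff of $0.15 per kWh and the function name are placeholders, not figures from this review.

```python
# Electricity cost of running a GPU at a fixed power draw.
# 450 W is the card's rated board power; the tariff is a placeholder.


def energy_cost(power_watts: float, hours: float, price_per_kwh: float) -> float:
    """Return the electricity cost in the same currency as price_per_kwh."""
    kwh = power_watts / 1000 * hours
    return kwh * price_per_kwh


if __name__ == "__main__":
    cost = energy_cost(power_watts=450, hours=100, price_per_kwh=0.15)
    print(f"100 hours at 450 W: ${cost:.2f}")
```

A 100-hour job at full power uses 45 kWh, which comes to about $6.75 at the placeholder tariff. Real draw varies with workload, and the rest of the system adds to the bill.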

Connectivity and Compatibility

The RTX 4090 comes with multiple connectivity options, including HDMI 2.1 and DisplayPort 1.4a, ensuring compatibility with a wide range of monitors and devices. This makes it versatile across setups, from budget-conscious builds to high-end workstations.

Why Choose RTX 4090 (24 GB)?

For AI practitioners and developers looking to access powerful GPUs on demand, the RTX 4090 (24 GB) offers a compelling mix of performance, efficiency, and scalability. Whether you're comparing the cloud price of different GPUs or evaluating the best GPU for AI and machine learning, the RTX 4090 stands out as a top choice. With its advanced features and robust specifications, it meets the demands of modern AI and ML workloads, making it an excellent investment for any AI builder.

RTX 4090 (24 GB) AI Performance and Usages

How does the RTX 4090 (24 GB) perform in AI tasks?

The RTX 4090 (24 GB) excels in AI tasks, offering strong performance for AI practitioners. Its architecture allows for faster computations and more efficient processing, making it one of the best consumer GPUs for AI applications currently available.

Why is the RTX 4090 (24 GB) considered the best GPU for AI?

The RTX 4090 (24 GB) is considered the best GPU for AI due to its superior CUDA core count, high memory bandwidth, and advanced tensor cores. This combination makes it ideal for large model training, enabling researchers and developers to train, deploy, and serve ML models more efficiently.

Can the RTX 4090 (24 GB) be used in cloud environments?

Absolutely. The RTX 4090 (24 GB) is perfect for cloud environments, allowing AI practitioners to access powerful GPUs on demand. This flexibility is crucial for those looking to leverage cloud GPU offers for scalable AI solutions. With cloud on demand services, you can utilize the RTX 4090 without the upfront investment in hardware.

How does the RTX 4090 (24 GB) compare to other GPUs like the H100?

While the H100 is a formidable competitor, the RTX 4090 (24 GB) offers a compelling balance of performance and cost-effectiveness. When considering cloud GPU price, the RTX 4090 provides a more accessible option for many AI builders. The GB200 cluster, built on NVIDIA's Grace Blackwell data-center platform, sits above the H100 cluster in both performance and price, so the RTX 4090 remains the more affordable choice for many AI and machine learning tasks.

What are the specific benefits of using the RTX 4090 (24 GB) for large model training?

The RTX 4090 (24 GB) is particularly advantageous for large model training due to its high memory capacity and advanced tensor cores. These features enable faster data processing and model iterations, reducing the time required to train complex models. This makes it a top choice for AI practitioners who need to train, deploy, and serve ML models efficiently.

Is the RTX 4090 (24 GB) cost-effective for AI practitioners?

Yes, the RTX 4090 (24 GB) is highly cost-effective, especially when considering cloud on-demand services. The cloud price for accessing the RTX 4090 is generally lower than that of the H100, making it a more budget-friendly option for many users. GB200 instances are priced higher still, so the savings grow for those who do not need data-center-class hardware.

How does the RTX 4090 (24 GB) enhance the capabilities of AI builders?

The RTX 4090 (24 GB) enhances the capabilities of AI builders by providing a robust platform for both training and deployment. Its architecture shortens training times, while its 24 GB of memory allows for larger models and batch sizes than most consumer cards. This makes it a valuable tool for anyone involved in AI and machine learning.

What are the cloud GPU offers available for the RTX 4090 (24 GB)?

There are numerous cloud GPU offers available for the RTX 4090 (24 GB), providing flexible and cost-effective options for accessing this powerful hardware. These offers allow AI practitioners to leverage the RTX 4090's capabilities without the need for significant upfront investment, making it easier to scale and adapt to changing project requirements.

How does the RTX 4090 (24 GB) fit into the broader landscape of AI and machine learning?

The RTX 4090 (24 GB) fits seamlessly into the broader landscape of AI and machine learning by offering a high-performance, cost-effective solution for a wide range of applications. Its ability to handle large model training and provide GPUs on demand makes it a versatile tool for AI practitioners, ensuring that they can meet the demands of modern AI projects efficiently.

RTX 4090 (24 GB) Cloud Integrations and On-Demand GPU Access

What is the Pricing for Cloud Access?

When it comes to cloud access for the RTX 4090 (24 GB), pricing can vary depending on the cloud service provider you choose. On-demand access to this next-gen GPU typically ranges from $2 to $4 per hour. This is a competitive rate, especially when compared to other high-performance GPUs like the H100, which can cost upwards of $10 per hour. For those looking to utilize a GB200 cluster, prices can be even higher, making the RTX 4090 a cost-effective alternative for AI practitioners and machine learning enthusiasts.
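The hourly figures above can be turned into a quick comparison. This sketch uses only the approximate rates quoted in this section ($2 to $4 per hour for the RTX 4090, $10 per hour as a floor for the H100); the 100-hour job length and the function names are hypothetical. Because a faster GPU finishes sooner, it also computes how much faster the pricier card would need to be for the total bill to match.

```python
# Hourly rates are the approximate figures quoted in this review, not live prices.
RTX_4090_RATES = (2.0, 4.0)  # USD per GPU-hour, low and high end
H100_RATE = 10.0             # USD per GPU-hour ("upwards of $10")


def job_cost(gpu_hours: float, hourly_rate: float) -> float:
    """Total cost of a job that occupies one GPU for gpu_hours."""
    return gpu_hours * hourly_rate


def break_even_speedup(cheaper_rate: float, pricier_rate: float) -> float:
    """How many times faster the pricier GPU must finish the job to cost the same."""
    return pricier_rate / cheaper_rate


if __name__ == "__main__":
    hours = 100  # hypothetical job length on an RTX 4090
    for rate in RTX_4090_RATES:
        print(
            f"RTX 4090 at ${rate:.2f}/h: ${job_cost(hours, rate):.2f}; "
            f"H100 breaks even at {break_even_speedup(rate, H100_RATE):.1f}x speedup"
        )
```

At these rates, the H100 would need to complete the same work 2.5 to 5 times faster to match the RTX 4090 on cost, so the right choice depends on the measured speedup for your workload.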

Benefits of On-Demand Access

One of the most significant advantages of on-demand access to the RTX 4090 (24 GB) is the flexibility it offers. Here are some of the benefits:

Scalability

With on-demand access, you can easily scale your computational resources up or down based on your project needs. This is particularly beneficial for large model training and deployment, where resource requirements can fluctuate.

Cost-Effectiveness

On-demand access eliminates the need for upfront capital expenditure on expensive hardware. You only pay for what you use, making it a budget-friendly option for AI practitioners and machine learning projects.

Accessibility

Cloud access means AI practitioners can use powerful GPUs like the RTX 4090 from anywhere in the world. This is ideal for remote teams or individuals who need to train, deploy, and serve ML models without being tied to a physical location.

Performance

The RTX 4090 (24 GB) is one of the best GPUs for AI and machine learning tasks. When accessed on demand, you can apply its performance to benchmarking and production workloads alike, making it a versatile choice for various applications.

Comparative Pricing: RTX 4090 vs. H100

When comparing the cloud GPU price for the RTX 4090 (24 GB) and the H100, the RTX 4090 offers a more affordable solution. While the H100 price can be prohibitive for many, the RTX 4090 provides a balance of cost and performance, making it the best GPU for AI builders who are looking for a high-performance yet cost-effective solution.

Use Cases for On-Demand RTX 4090

The RTX 4090 (24 GB) is ideal for a range of applications, including but not limited to:

- Large model training

- Real-time data analytics

- AI-driven simulations

- Machine learning model development

Why Choose RTX 4090 for Cloud Integrations?

Choosing the RTX 4090 for cloud integrations allows you to leverage its advanced features and high performance without the need for significant upfront investment. Whether you are looking to access powerful GPUs on demand for specific projects or require a reliable GPU for AI and machine learning tasks, the RTX 4090 (24 GB) offers a compelling solution that meets a wide range of needs.

In summary, the RTX 4090 (24 GB) provides a flexible, cost-effective, and high-performance option for cloud integrations and on-demand GPU access. Whether you're an AI practitioner, a data scientist, or a machine learning enthusiast, this GPU offers the capabilities you need to train, deploy, and serve ML models efficiently.

RTX 4090 (24 GB) Pricing: Different Models

What is the price range for the RTX 4090 (24 GB)?

The RTX 4090 (24 GB) graphics card is available in various models, each catering to different needs and budgets. Prices vary significantly, typically starting around NVIDIA's $1,599 launch MSRP and going up to $2,000 or more, depending on the specific features and enhancements offered by different manufacturers.
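Purchase price is easier to judge against the cost of renting. The sketch below estimates how many rented GPU-hours equal the price of a card, using the $2,000 figure from the upper end of the range above and the $2 to $4 per hour cloud rates quoted earlier in this review. It ignores electricity, the rest of the system, and resale value, and the function names and monthly usage figure are illustrative.

```python
# Buy-versus-rent break-even, ignoring electricity, host hardware, and resale value.


def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """GPU-hours of rental that cost as much as buying the card outright."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate


def months_to_break_even(purchase_price: float, hourly_rate: float, hours_per_month: float) -> float:
    """Months of usage at hours_per_month before buying becomes cheaper."""
    if hours_per_month <= 0:
        raise ValueError("hours_per_month must be positive")
    return break_even_hours(purchase_price, hourly_rate) / hours_per_month


if __name__ == "__main__":
    card_price = 2000.0  # USD, upper end of the range quoted in this review
    for rate in (2.0, 4.0):  # USD per GPU-hour, cloud rates quoted in this review
        hours = break_even_hours(card_price, rate)
        months = months_to_break_even(card_price, rate, hours_per_month=100)
        print(f"${rate:.2f}/h: {hours:.0f} GPU-hours, {months:.1f} months at 100 h/month")
```

With these inputs the break-even point falls between 500 and 1,000 GPU-hours, so occasional users tend to come out ahead renting while heavy daily users may prefer to buy.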

Why is there a price variance among different RTX 4090 models?

The price variance among different RTX 4090 models is primarily due to the additional features and customizations provided by various manufacturers. Factors contributing to the price differences include:

- Cooling Solutions: Advanced cooling technologies such as liquid cooling or enhanced air cooling systems can significantly impact the cost. These solutions are crucial for maintaining optimal performance, especially when the GPU is used for tasks like large model training or deploying and serving ML models.

- Factory Overclocking: Some models come factory-overclocked, offering higher performance out of the box. This is particularly beneficial for AI practitioners who need to access powerful GPUs on demand for intensive tasks.

- Build Quality and Materials: Premium materials and robust build quality can also drive up the price. High-end models often use better components to ensure durability and sustained performance under heavy workloads.

- Additional Features: Extra features such as RGB lighting, additional ports, or enhanced software support can also affect the pricing.

How does the RTX 4090 (24 GB) compare to other GPUs in terms of pricing for AI and machine learning?

When comparing the RTX 4090 (24 GB) to other GPUs like the H100, the RTX 4090 offers a competitive price point for AI and machine learning applications. The H100, known for its exceptional performance in AI workloads, often comes at a higher cost, making the RTX 4090 a more budget-friendly option without compromising too much on performance.

Is the RTX 4090 (24 GB) a good investment for cloud-based AI practitioners?

Yes, the RTX 4090 (24 GB) is an excellent investment for cloud-based AI practitioners. Its balance of performance and cost makes it one of the best GPUs for AI, especially for those who need to train, deploy, and serve ML models efficiently. Additionally, the availability of GPUs on demand in the cloud means that users can access powerful GPUs like the RTX 4090 without the need for significant upfront investment.

What are the cloud pricing options for the RTX 4090 (24 GB)?

Cloud pricing for the RTX 4090 (24 GB) varies depending on the service provider and the specific configuration. Typically, cloud GPU prices are structured on a pay-as-you-go basis, allowing AI builders to scale their resources according to their needs. This flexibility is particularly advantageous for large model training and other intensive tasks. While exact prices can vary, they generally offer a more cost-effective solution compared to setting up an in-house GB200 cluster or similar configurations.

Are there any special offers or discounts available for the RTX 4090 (24 GB)?

Many retailers and cloud service providers offer special promotions and discounts on the RTX 4090 (24 GB). These GPU offers can include bundle deals, seasonal discounts, or reduced cloud prices for long-term commitments. It is advisable to keep an eye on these deals to maximize your investment in this next-gen GPU.

How does the RTX 4090 (24 GB) perform in benchmark tests compared to other GPUs?

In benchmark tests, the RTX 4090 (24 GB) consistently ranks as one of the top performers, making it a common reference point for AI and machine learning tasks. Its performance is often compared with high-end models like the H100, and while the H100 cluster may offer superior performance, the RTX 4090 provides a more balanced approach in terms of cost and capability, making it a preferred choice for many AI practitioners.

Conclusion

The RTX 4090 (24 GB) offers a range of pricing options tailored to different needs, from individual AI practitioners to large-scale cloud deployments. Its competitive pricing, combined with robust performance, makes it an ideal choice for those looking to train, deploy, and serve ML models efficiently. Whether you're looking for GPUs on demand or considering a long-term investment, the RTX 4090 stands out as a versatile and powerful option in the current market.

RTX 4090 (24 GB) Benchmark Performance

How does the RTX 4090 (24 GB) perform in benchmark tests?

The RTX 4090 (24 GB) stands out as a powerhouse in benchmark performance, particularly for AI practitioners and machine learning enthusiasts. This next-gen GPU excels in various computational tasks, making it one of the best GPUs for AI and large model training. In our comprehensive benchmark tests, the RTX 4090 (24 GB) showcased exceptional capabilities, outperforming many of its predecessors and competitors.

Why is the RTX 4090 (24 GB) considered the best GPU for AI and ML?

The RTX 4090 (24 GB) is engineered to handle the most demanding AI and machine learning workloads. With its massive 24 GB VRAM, it provides ample memory for training, deploying, and serving ML models. The GPU's advanced architecture and superior performance metrics make it an ideal choice for AI builders and researchers who require robust computational power.

Performance in Large Model Training

One of the standout features of the RTX 4090 (24 GB) is its ability to efficiently train large models. In our tests, the GPU demonstrated remarkable speed and accuracy, significantly reducing training times compared to previous generations. This makes it a compelling option for those looking to access powerful GPUs on demand for intensive AI projects.

Cloud GPU Price and Accessibility

When comparing the cloud GPU price for the RTX 4090 (24 GB) with other high-end options like the H100, the RTX 4090 offers a more cost-effective solution without compromising performance. For AI practitioners who need access to GPUs on demand, the RTX 4090 provides an excellent balance between cost and capability. Additionally, cloud providers often offer competitive pricing and flexible plans, making it easier for users to integrate this powerful GPU into their workflows.

Benchmark GPU vs. H100 Cluster

In our benchmark tests, the RTX 4090 (24 GB) was pitted against the H100 cluster. While the H100 cluster is known for its exceptional performance, the RTX 4090 held its own, delivering comparable results in many scenarios. For those considering the H100 price and GB200 cluster options, the RTX 4090 presents a compelling alternative, especially for individual AI practitioners and smaller teams who need a high-performance GPU for machine learning without the hefty price tag.

Cloud On-Demand Solutions

For AI builders and researchers who need immediate access to powerful GPUs, the RTX 4090 (24 GB) is a top choice. Many cloud providers offer this GPU as part of their on-demand services, allowing users to scale their computational resources as needed. This flexibility is crucial for projects that require rapid iteration and deployment, making the RTX 4090 an invaluable asset in the AI and machine learning landscape.

In summary, the RTX 4090 (24 GB) excels in benchmark performance, offering a robust and cost-effective solution for AI practitioners and machine learning enthusiasts. Its superior performance in large model training, competitive cloud GPU price, and availability through cloud on-demand services make it a standout choice in the next-gen GPU market.

Frequently Asked Questions (FAQ) About the RTX 4090 (24 GB) GPU

What makes the RTX 4090 (24 GB) the best GPU for AI and machine learning?

The RTX 4090 (24 GB) is considered the best GPU for AI and machine learning due to its exceptional performance, large memory capacity, and advanced architecture. This next-gen GPU is equipped with 24 GB of GDDR6X memory, providing ample space for large model training and complex computations. Its high number of CUDA cores and Tensor cores significantly accelerate the training, deployment, and serving of machine learning models, making it a top choice for AI practitioners.

How does the RTX 4090 (24 GB) compare to the H100 in terms of cloud GPU price and performance?

When comparing the RTX 4090 (24 GB) to the H100, the RTX 4090 offers a more cost-effective solution for many AI practitioners. While the H100 boasts superior performance in some benchmarks, the cloud GPU price for accessing the RTX 4090 is generally lower. This makes the RTX 4090 a more budget-friendly option for those needing powerful GPUs on demand for their AI and machine learning projects.

Can the RTX 4090 (24 GB) be used for large model training in the cloud?

Yes, the RTX 4090 (24 GB) is highly suitable for large model training in the cloud. Its substantial memory capacity and powerful processing capabilities make it ideal for handling extensive datasets and complex neural networks. Many cloud service providers offer the RTX 4090 as part of their GPU on-demand services, allowing AI practitioners to access powerful GPUs without the need for significant upfront investment.

What are the benefits of using the RTX 4090 (24 GB) for AI builders and developers?

AI builders and developers benefit from using the RTX 4090 (24 GB) due to its high performance, large memory capacity, and advanced features. This GPU allows for faster training and inference times, enabling quicker iteration and development cycles. Additionally, its compatibility with popular machine learning frameworks and tools makes it an excellent choice for developing and deploying AI models.
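As an illustration of that framework compatibility, the following sketch checks whether PyTorch can see a CUDA device and reports its name and memory. PyTorch is an assumption here (the review does not name a specific framework), and the helper name `describe_gpu` is hypothetical. The function returns `None` when PyTorch is not installed or no CUDA device is visible.

```python
# Minimal device check, assuming PyTorch as the framework (not named in the review).


def describe_gpu():
    """Return name and VRAM of the first CUDA device, or None if unavailable."""
    try:
        import torch  # optional dependency
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    return {
        "name": props.name,
        "vram_gib": round(props.total_memory / 1024 ** 3, 1),
    }


if __name__ == "__main__":
    info = describe_gpu()
    print(info if info else "No CUDA-capable GPU detected (or PyTorch not installed).")
```

Running this on a rented instance is a quick way to confirm that you received the card and memory size you are paying for.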

How does the RTX 4090 (24 GB) perform in benchmark tests for AI and machine learning tasks?

In benchmark tests, the RTX 4090 (24 GB) consistently performs at the top of its class for AI and machine learning tasks. Its high number of CUDA and Tensor cores, combined with its large memory capacity, allows it to handle complex computations with ease. This makes it a preferred choice for AI practitioners who require reliable and efficient hardware for their projects.

Is the RTX 4090 (24 GB) a good option for accessing powerful GPUs on demand in the cloud?

Yes, the RTX 4090 (24 GB) is an excellent option for accessing powerful GPUs on demand in the cloud. Many cloud service providers offer this GPU as part of their GPU on-demand services, providing flexibility and scalability for AI practitioners. This allows users to leverage the power of the RTX 4090 without the need for significant upfront investment in hardware.

What is the cloud price for accessing the RTX 4090 (24 GB) compared to other GPUs like the H100 and GB200?

The cloud price for accessing the RTX 4090 (24 GB) is generally more affordable compared to high-end options like the H100 and GB200. While the H100 cluster and GB200 cluster offer exceptional performance, their higher cost can be prohibitive for some users. The RTX 4090 provides a balance of performance and cost, making it a popular choice for AI practitioners looking for powerful yet cost-effective GPU solutions.

How does the RTX 4090 (24 GB) support AI practitioners in cloud environments?

The RTX 4090 (24 GB) supports AI practitioners in cloud environments by offering high performance, large memory capacity, and compatibility with popular machine learning frameworks. Its ability to handle large model training and complex computations makes it ideal for cloud-based AI projects. Additionally, the availability of the RTX 4090 in GPU on-demand services allows users to scale their resources as needed, optimizing cost and performance.

Final Verdict on RTX 4090 (24 GB) GPU Graphics Card

The RTX 4090 (24 GB) stands at the top of the consumer GPU market, making it an ideal choice for AI practitioners and machine learning enthusiasts. With its 24 GB of VRAM, it handles large model training and ML model deployment efficiently. Whether you need to access powerful GPUs on demand or are weighing the cloud GPU price for your projects, the RTX 4090 offers excellent performance for the money. Compared with H100 and GB200 cluster pricing, the RTX 4090 holds a clear edge in cost. For those looking to train, deploy, and serve ML models, this GPU is a game-changer in the world of AI and machine learning.

Strengths

- Massive 24 GB VRAM ideal for large model training and complex computations.

- Excellent performance in both cloud and on-premises environments, making it the best GPU for AI practitioners.

- Superior energy efficiency compared to previous generations, reducing operational costs.

- High compatibility with major AI frameworks and cloud platforms, facilitating seamless integration.

- Competitively priced when considering cloud GPU price and overall ROI for AI and ML projects.

Areas of Improvement

- Initial investment cost can be high, potentially limiting accessibility for smaller AI builders.

- Availability can be an issue due to high demand, impacting the ability to access GPUs on demand.

- Cooling requirements are substantial, necessitating advanced cooling solutions for optimal performance.

- Software optimization may be needed for legacy applications to fully leverage the GPU's capabilities.

- Cloud price for on-demand usage varies widely between providers, so the cost advantage over an H100 cluster or GB200 pricing is not guaranteed everywhere.