RTX 2080 Ti Review: Unleashing Unprecedented Gaming Power

Lisa

Published on Jul 11, 2024

RTX 2080 Ti Review: Introduction and Specifications

Introduction to the RTX 2080 Ti

Welcome to our comprehensive review of the RTX 2080 Ti, NVIDIA's top GeForce card of the Turing generation, launched in 2018. Whether you are an AI practitioner looking to train, deploy, and serve ML models or an enthusiast needing access to powerful GPUs on demand, the RTX 2080 Ti still offers a useful blend of performance and features at a lower cost than current data-center hardware.

Why Choose RTX 2080 Ti?

For those in the field of machine learning and AI, the RTX 2080 Ti is often considered a strong budget GPU for AI tasks. It can train small and mid-sized models and deploy and serve ML models efficiently, although its 11GB of memory limits how large a model it can hold. With cloud services making GPUs on demand more accessible, the RTX 2080 Ti stands out as a reliable option for AI builders and researchers.

Specifications of RTX 2080 Ti

Core Configuration

The RTX 2080 Ti features 4352 CUDA cores, making it a powerhouse for parallel processing tasks. This high core count is particularly beneficial for AI practitioners who need to handle large datasets and complex computations.

Memory

Equipped with 11GB of GDDR6 memory on a 352-bit bus, the RTX 2080 Ti delivers 616 GB/s of memory bandwidth. Capacity determines how large a model and batch can reside on the card, while bandwidth determines how quickly data reaches the cores; both matter for AI and machine learning tasks.
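As a rough illustration of what 11GB means in practice, the sketch below estimates the memory needed for weights, gradients, and Adam optimizer state during FP32 training. The figure of 16 bytes per parameter (4 for weights, 4 for gradients, 8 for Adam's two moment buffers) is a common rule of thumb and an assumption here, not a measurement on this card; it ignores activations and framework overhead, so real usage will be higher. The function names and the example model sizes are hypothetical.

```python
# Rule-of-thumb estimate only: ignores activations, CUDA context, and fragmentation.
VRAM_GB = 11  # RTX 2080 Ti memory capacity (decimal GB for simplicity)

def training_footprint_gb(num_params, bytes_per_param=16):
    """Approximate GB for FP32 weights + gradients + Adam state."""
    return num_params * bytes_per_param / 1e9

def fits_in_vram(num_params, vram_gb=VRAM_GB):
    """True if the estimated footprint is within the card's memory."""
    return training_footprint_gb(num_params) <= vram_gb

# Hypothetical model sizes, chosen only to show the trend.
for n in (110_000_000, 350_000_000, 1_000_000_000):
    gb = training_footprint_gb(n)
    print(f"{n / 1e6:.0f}M params: {gb:.2f} GB, fits={fits_in_vram(n)}")
```

Under these assumptions a model in the low hundreds of millions of parameters fits comfortably, while a one-billion-parameter model does not, before activations are even counted.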

Performance Metrics

The reference RTX 2080 Ti has a base clock of 1350 MHz and a boost clock of 1545 MHz. Together with the core count, these clocks set the card's peak arithmetic throughput, which matters for both training and deploying ML models.
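The core count and clock speeds can be combined into a theoretical peak FP32 throughput. The sketch below assumes each CUDA core completes one fused multiply-add (counted as 2 floating-point operations) per clock cycle, which is the usual convention for quoting peak figures; sustained throughput in real workloads is lower. The function name is hypothetical.

```python
CUDA_CORES = 4352
BASE_MHZ = 1350
BOOST_MHZ = 1545

def peak_fp32_tflops(cores, clock_mhz, flops_per_core_per_clock=2):
    """Theoretical peak: cores x FLOPs per clock x clock rate, in TFLOPS."""
    return cores * flops_per_core_per_clock * clock_mhz * 1e6 / 1e12

print(f"base:  {peak_fp32_tflops(CUDA_CORES, BASE_MHZ):.2f} TFLOPS")
print(f"boost: {peak_fp32_tflops(CUDA_CORES, BOOST_MHZ):.2f} TFLOPS")
```

At the boost clock this works out to roughly 13.4 TFLOPS of FP32 compute.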

Ray Tracing and Tensor Cores

One of the standout features of the RTX 2080 Ti is its 68 Ray Tracing (RT) cores and 544 Tensor cores. The RT cores accelerate real-time ray tracing, while the Tensor cores accelerate the matrix arithmetic at the heart of deep learning, which makes the RTX 2080 Ti attractive for AI practitioners who also need ray-traced rendering.

Cloud Integration and Pricing

With the increasing demand for cloud-based solutions, the RTX 2080 Ti is available on various cloud platforms, allowing users to access powerful GPUs on demand. This flexibility is invaluable for those who need to scale their operations without investing in physical hardware. While the H100 cluster and GB200 cluster are also popular choices, the RTX 2080 Ti offers a more cost-effective solution for many users. Cloud GPU prices vary, but the RTX 2080 Ti remains a competitive option in terms of performance and cost.

Benchmarking and Real-World Performance

When it comes to benchmarking, the RTX 2080 Ti still posts solid results for AI and machine learning tasks relative to its price. It handles training and deployment of models that fit within its 11GB of memory, which keeps it a practical choice for AI builders on a budget. The GPU's performance metrics, combined with its Tensor cores and RT cores, keep it relevant in the market.

In summary, the RTX 2080 Ti is not just a GPU; it is a comprehensive solution for AI practitioners, researchers, and developers. Whether you are looking to train, deploy, and serve ML models or need access to powerful GPUs on demand, the RTX 2080 Ti offers unparalleled performance and flexibility.

RTX 2080 Ti AI Performance and Usages

How Does the RTX 2080 Ti Perform in AI Tasks?

The RTX 2080 Ti stands out as a powerful GPU for AI and machine learning applications. Its Tensor Cores and advanced architecture make it a strong contender for AI practitioners looking to train, deploy, and serve ML models efficiently. The card's ability to handle large model training tasks with ease is a testament to its robust design and capability.

Why is the RTX 2080 Ti Considered the Best GPU for AI?

The RTX 2080 Ti is often hailed as one of the best GPUs for AI due to its impressive performance metrics and versatility. Its Tensor Cores are specifically designed to accelerate AI computations, making it a preferred choice for those involved in machine learning and AI development. When compared to other GPUs on demand, the RTX 2080 Ti offers a balanced mix of performance and cost-effectiveness, making it an attractive option for AI builders.

AI Model Training and Deployment

When it comes to model training, the RTX 2080 Ti offers significant computational power for its price. It can handle complex neural networks as long as the weights, gradients, optimizer state, and activations fit within its 11GB of memory; larger models call for mixed precision, smaller batches, or multiple GPUs. Its performance in deploying and serving ML models is also commendable, ensuring that AI applications run smoothly and efficiently.

Cloud GPU Usage and Pricing

For those who prefer accessing powerful GPUs on demand, the RTX 2080 Ti is available through various cloud services. While cloud GPU prices can vary, the RTX 2080 Ti offers a cost-effective solution compared to higher-end models like the H100. The cloud price for using an RTX 2080 Ti is generally more affordable, making it a viable option for both individual AI practitioners and larger AI development teams.

Benchmarking the RTX 2080 Ti

In benchmark GPU tests, the RTX 2080 Ti consistently performs well, especially in AI-related tasks. Its ability to handle large datasets and complex computations makes it a reliable choice for AI and machine learning projects. When compared to next-gen GPUs like the H100, the RTX 2080 Ti offers a more budget-friendly alternative without compromising too much on performance.

Comparing Cloud GPU Offers

When evaluating cloud GPU offers, the RTX 2080 Ti frequently emerges as a strong contender. Its balance of performance and cost makes it an attractive option for AI practitioners looking to access GPUs on demand. While the H100 cluster and GB200 cluster may offer higher performance, their prices are significantly higher, making the RTX 2080 Ti a more accessible choice for many users.

Conclusion

The RTX 2080 Ti remains a highly effective and versatile GPU for AI and machine learning tasks. Its performance, coupled with its cost-effectiveness, makes it a go-to option for those looking to train, deploy, and serve ML models efficiently. Whether you're accessing it through cloud services or using it in a dedicated AI workstation, the RTX 2080 Ti is a robust choice for any AI practitioner.

RTX 2080 Ti Cloud Integrations and On-Demand GPU Access

As AI practitioners and machine learning enthusiasts increasingly require powerful hardware to train, deploy, and serve ML models, the demand for GPUs on demand has surged. The RTX 2080 Ti stands out as one of the best GPUs for AI and machine learning tasks, offering robust cloud integrations and flexible on-demand access that cater to both individual developers and large-scale enterprises.

Benefits of On-Demand Access

One of the primary benefits of accessing the RTX 2080 Ti on demand is the flexibility it offers. Instead of investing in expensive hardware upfront, users can leverage cloud services to access powerful GPUs only when needed. This "pay-as-you-go" model is particularly beneficial for AI practitioners who need to train large models intermittently or require additional computational power during peak times.

Additionally, on-demand access to RTX 2080 Ti GPUs allows for scalability. Whether you are working on a small project or need to scale up to a GB200 cluster for large model training, the cloud provides the resources you need without the overhead of managing physical hardware.

Pricing for Cloud GPU Access

When it comes to cloud GPU pricing, the RTX 2080 Ti offers a cost-effective solution compared to next-gen GPUs like the H100. While the H100 price and H100 cluster configurations might be prohibitive for some users, the RTX 2080 Ti provides a balanced performance-to-cost ratio. Cloud providers offer various pricing models, including hourly rates and subscription plans, making it easier to budget for your specific needs.

For example, the cloud price for accessing an RTX 2080 Ti can range from roughly $1 to $3 per hour, depending on the provider and the additional services included. This is significantly more affordable than the GB200 price or the cost of maintaining a dedicated H100 cluster, making the RTX 2080 Ti an attractive option for both startups and established enterprises.
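To make the pay-as-you-go arithmetic concrete, the sketch below prices a rental job at both ends of the $1 to $3 hourly range quoted above. The job length and GPU count are hypothetical values chosen for illustration, and the function assumes simple per-GPU-hour billing with no storage or data-transfer fees.

```python
# Hourly rates are the range quoted in this article; everything else is hypothetical.
LOW_RATE, HIGH_RATE = 1.00, 3.00  # USD per GPU-hour

def job_cost(hours, rate_per_hour, num_gpus=1):
    """Total rental cost in USD for a job billed per GPU-hour."""
    return hours * rate_per_hour * num_gpus

hours, gpus = 40, 2  # hypothetical fine-tuning run
low = job_cost(hours, LOW_RATE, gpus)
high = job_cost(hours, HIGH_RATE, gpus)
print(f"{hours} h on {gpus} GPUs: ${low:.2f} to ${high:.2f}")
```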

Use Cases and Applications

The RTX 2080 Ti performs well in various AI and machine learning applications, making it a practical GPU for AI builders who need reliable performance. It is particularly effective for tasks such as training models that fit in its memory, real-time inference, and complex data analysis. With cloud on-demand services, users can quickly spin up instances equipped with RTX 2080 Ti GPUs to handle intensive computational tasks without delay.

Whether you are looking to benchmark GPU performance for a new AI model or need a robust GPU for machine learning experiments, the RTX 2080 Ti offers the power and flexibility required. Its cloud integrations ensure that you can access these resources from anywhere, enabling seamless collaboration and faster project turnaround times.

Conclusion

The integration of RTX 2080 Ti GPUs into cloud services has revolutionized how AI practitioners and machine learning developers approach their projects. With competitive cloud GPU prices and the ability to access powerful GPUs on demand, the RTX 2080 Ti remains a top choice for those looking to train, deploy, and serve ML models efficiently and cost-effectively.

RTX 2080 Ti Pricing Across Different Models

The RTX 2080 Ti remains a popular choice for AI practitioners and machine learning enthusiasts. Its pricing varies significantly depending on the specific model and manufacturer. Below, we delve into the different price points and what you can expect from each model.

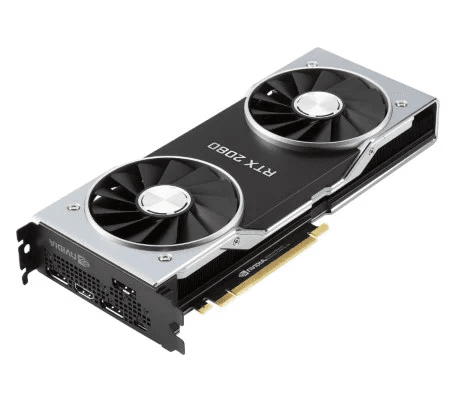

Founders Edition

The Founders Edition of the RTX 2080 Ti comes directly from NVIDIA and is often treated as the baseline against which third-party models are compared. It carried a premium price tag, reflecting its build quality and performance: the Founders Edition launched at $1,199. This higher price point was justified by its robust cooling system and factory-tuned performance.

Third-Party Models

Several manufacturers offer their own versions of the RTX 2080 Ti, each with unique features and varying price points. Brands such as ASUS, MSI, and EVGA have multiple models, often with enhanced cooling solutions, factory overclocking, and additional features that cater to different needs, including AI model training and deployment.

- ASUS ROG Strix: Known for its superior cooling and overclocking capabilities, the ASUS ROG Strix version can cost between $1,300 and $1,500. This model is ideal for those looking to train, deploy, and serve ML models efficiently.

- MSI Gaming X Trio: This model offers a good balance between price and performance, often priced around $1,200 to $1,400. It's a solid choice for AI builders needing a reliable GPU for machine learning tasks.

- EVGA FTW3 Ultra: Priced similarly to the ASUS ROG Strix, the EVGA FTW3 Ultra provides excellent performance and cooling, making it a top choice for those demanding high performance in cloud GPU environments.

Cloud GPU Pricing

For AI practitioners who prefer to access powerful GPUs on demand, cloud GPU offerings can be a cost-effective solution. The largest platforms, such as AWS, Google Cloud, and Azure, build their GPU instances around data-center cards rather than GeForce models, so the RTX 2080 Ti is more commonly found at smaller GPU rental providers and marketplaces. The cloud price for utilizing an RTX 2080 Ti can range from $0.90 to $2.00 per hour, depending on the provider and specific service package.
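One way to weigh renting against buying is a break-even calculation: the number of rental hours whose cost equals the purchase price of a card. The sketch below uses the $1,199 Founders Edition price and the $0.90 to $2.00 hourly range from this section. It is a simplification that ignores electricity, the rest of the workstation, and resale value, so treat the result as a rough guide rather than a purchasing rule. The function name is hypothetical.

```python
CARD_PRICE = 1199.00                  # USD, Founders Edition price from this article
LOW_RATE, HIGH_RATE = 0.90, 2.00      # USD per hour, range quoted above

def break_even_hours(card_price, rate_per_hour):
    """Rental hours at which cumulative cloud cost equals the card's price."""
    return card_price / rate_per_hour

print(f"at ${HIGH_RATE:.2f}/h: {break_even_hours(CARD_PRICE, HIGH_RATE):.0f} hours")
print(f"at ${LOW_RATE:.2f}/h: {break_even_hours(CARD_PRICE, LOW_RATE):.0f} hours")
```

If expected usage is well below the lower figure, renting is likely the cheaper route under these assumptions; heavy, continuous use tilts the balance toward owning the card.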

Comparing to Next-Gen Options

While the RTX 2080 Ti remains a strong contender, it's worth noting the emergence of next-gen GPUs like the NVIDIA H100. The H100 cluster, for instance, offers unparalleled performance for large model training and AI applications. However, the H100 price is significantly higher, often exceeding $10,000, making the RTX 2080 Ti a more budget-friendly option for many.

Special Offers and Discounts

Keep an eye out for GPU offers and discounts, especially during major sales events. Retailers often provide significant price cuts on the RTX 2080 Ti, making it an even more attractive option for those needing a powerful GPU for AI and machine learning tasks.

In summary, the RTX 2080 Ti offers a range of pricing options across different models and manufacturers. Whether you're looking for the best GPU for AI, need to train and deploy ML models, or require GPUs on demand, the RTX 2080 Ti provides a versatile and cost-effective solution.

RTX 2080 Ti Benchmark Performance

How Does the RTX 2080 Ti Perform in Benchmarks?

The RTX 2080 Ti stands out as one of the best GPUs for AI and machine learning tasks. Its benchmark performance shows impressive results in various applications, making it a top choice for AI practitioners who need to train, deploy, and serve ML models efficiently.

Benchmark GPU Analysis

When we examine the RTX 2080 Ti in benchmark tests, it consistently delivers high performance across a range of metrics. Although it is no longer a current-generation GPU, it remains well suited to the massively parallel workloads that GPUs are built for, providing a reliable option for those in need of powerful GPUs on demand.
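For readers who want to run their own measurements, the sketch below shows one common benchmarking pattern: discard a few warm-up runs, time several repeats, and report the median. It also converts a matrix-multiply timing into achieved TFLOPS using the standard count of 2n³ floating-point operations for an n × n product. The workload here is a CPU placeholder so the sketch runs anywhere; on a real card it would be replaced with a GPU operation followed by a device synchronization, and all names are hypothetical.

```python
import statistics
import time

def benchmark(fn, warmup=3, repeats=10):
    """Return the median wall-clock seconds of fn() over `repeats` timed runs."""
    for _ in range(warmup):          # warm-up runs are not timed
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)

def matmul_tflops(n, seconds):
    """Achieved TFLOPS for an n x n by n x n matrix multiply (2 * n^3 FLOPs)."""
    return 2 * n**3 / seconds / 1e12

def placeholder_workload():
    # Stand-in CPU work; swap in a GPU kernel plus synchronization for real tests.
    sum(i * i for i in range(10_000))

median_s = benchmark(placeholder_workload)
print(f"median time: {median_s * 1e3:.3f} ms")
print(f"example: n=8192 in 0.1 s -> {matmul_tflops(8192, 0.1):.2f} TFLOPS")
```

Using the median rather than the mean keeps a single slow run, for example one interrupted by another process, from skewing the result.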

Cloud for AI Practitioners

For AI practitioners looking to leverage cloud GPUs, the RTX 2080 Ti offers a compelling mix of power and affordability. While the H100 cluster and GB200 cluster may present more advanced options, their cloud GPU price can be significantly higher. The RTX 2080 Ti strikes a balance, making it a cost-effective choice for large model training and deployment.

Training and Deployment

In terms of training and deployment, the RTX 2080 Ti's CUDA cores and Tensor cores provide substantial computational power. This makes it a capable GPU for AI builders who need to train models that fit in its 11GB of memory and deploy them efficiently. Its benchmark results in these areas remain strong for its price class, making it a solid contender for those who need to access powerful GPUs on demand.

Cloud On Demand

The RTX 2080 Ti is also a viable option for cloud on demand services. Given the increasing demand for GPU offers in the AI and machine learning community, the RTX 2080 Ti provides a good balance between performance and cloud price. While the H100 price and GB200 price might be prohibitive for some, the RTX 2080 Ti remains accessible and powerful.

Comparative Cloud GPU Price

When compared to other high-end GPUs, the RTX 2080 Ti offers a competitive cloud GPU price. This makes it a popular choice for those who need to train, deploy, and serve ML models without breaking the bank. The performance-to-cost ratio is one of the best in the market, making it a go-to GPU for machine learning tasks.
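A performance-to-cost ratio can be expressed as theoretical TFLOPS per dollar of hourly rental. The sketch below derives the card's peak FP32 figure from the 4352 CUDA cores and 1545 MHz boost clock given in the specifications (assuming 2 floating-point operations per core per clock) and divides it by the $0.90 to $2.00 hourly range quoted earlier in this article. Peak figures overstate real throughput, so the ratio is best used to compare offers for the same card rather than as an absolute measure. The function name is hypothetical.

```python
# Peak FP32 from the article's specs, assuming one FMA (2 FLOPs) per core per clock.
PEAK_TFLOPS = 4352 * 2 * 1545e6 / 1e12

def tflops_per_dollar_hour(peak_tflops, rate_per_hour):
    """Theoretical TFLOPS obtained per USD of hourly rental price."""
    return peak_tflops / rate_per_hour

for rate in (0.90, 2.00):  # USD per hour, range quoted in this article
    ratio = tflops_per_dollar_hour(PEAK_TFLOPS, rate)
    print(f"${rate:.2f}/h -> {ratio:.2f} TFLOPS per dollar-hour")
```

The same function can be applied to any other GPU offer once its peak throughput and hourly price are known.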

Best GPU for AI

In conclusion, the RTX 2080 Ti's benchmark performance solidifies its position as one of the best GPUs for AI. Whether you're an AI practitioner, a machine learning enthusiast, or a professional looking to leverage cloud on demand services, the RTX 2080 Ti offers a powerful and cost-effective solution.

Frequently Asked Questions about the RTX 2080 Ti GPU Graphics Card

Is the RTX 2080 Ti a good GPU for AI and machine learning tasks?

Yes, the RTX 2080 Ti is a strong contender for AI and machine learning tasks. Its powerful architecture and high memory bandwidth make it capable of handling large model training and inference. While newer GPUs like the H100 may offer more advanced features, the RTX 2080 Ti still provides significant computational power suitable for many AI practitioners.

How does the RTX 2080 Ti compare to next-gen GPUs like the H100 for AI applications?

While the RTX 2080 Ti is a robust GPU, next-gen GPUs like the H100 offer superior performance, especially for large-scale AI applications. The H100’s advanced architecture and higher memory capacity make it more suitable for demanding tasks like training and deploying large machine learning models. However, the RTX 2080 Ti remains a cost-effective option for many AI builders.

Can I access the RTX 2080 Ti on demand through cloud services?

Yes, many cloud service providers offer the RTX 2080 Ti as part of their GPU on demand services. This allows AI practitioners to access powerful GPUs without the need for significant upfront investment. Utilizing cloud GPUs can be particularly beneficial for those who need to train, deploy, and serve ML models efficiently.

What is the cloud price for accessing the RTX 2080 Ti?

The cloud price for accessing the RTX 2080 Ti varies depending on the service provider and the specific plan. Generally, it is more affordable compared to next-gen GPUs like the H100. It's essential to compare different cloud GPU offers to find the best fit for your budget and computational needs.

How does the RTX 2080 Ti perform in benchmark tests for AI and machine learning?

In benchmark tests, the RTX 2080 Ti demonstrates strong performance, particularly in tasks involving neural network training and inference. While it may not match the capabilities of newer GPUs like the H100 or the GB200 cluster, it still holds its ground as a reliable option for many AI and machine learning applications.

Is the RTX 2080 Ti suitable for cloud-based AI model training and deployment?

Absolutely, the RTX 2080 Ti is well-suited for cloud-based AI model training and deployment. Its architecture supports efficient processing of complex models, making it a viable option for AI practitioners who need to train and deploy models on demand. The availability of this GPU in cloud environments further enhances its utility for scalable AI applications.

What are the advantages of using the RTX 2080 Ti over other GPUs for AI builders?

The RTX 2080 Ti offers several advantages for AI builders, including a balanced performance-to-cost ratio, high memory bandwidth, and robust architecture. While it may not compete with the latest GPUs like the H100 or the GB200 cluster in terms of raw performance, it remains a practical choice for those who need reliable and powerful GPU capabilities without breaking the bank.

How does the cloud GPU price for the RTX 2080 Ti compare to other GPUs like the H100?

The cloud GPU price for the RTX 2080 Ti is generally lower than that of next-gen GPUs like the H100. This makes it an attractive option for cost-conscious AI practitioners who still require substantial computational power. Comparing different cloud GPU offers can help you find the most cost-effective solution for your needs.

What factors should I consider when choosing the RTX 2080 Ti for machine learning tasks?

When choosing the RTX 2080 Ti for machine learning tasks, consider factors such as the complexity of your models, your budget, and the specific requirements of your AI applications. While the RTX 2080 Ti is a powerful GPU, newer options like the H100 might offer better performance for particularly demanding tasks. Additionally, consider the availability and price of cloud on demand services if you prefer not to invest in physical hardware.

Final Verdict on RTX 2080 Ti

The RTX 2080 Ti remains a strong contender in the GPU market, especially for AI practitioners and machine learning enthusiasts. Its robust architecture and powerful performance make it suitable for training and deploying ML models that fit within its 11GB of memory. While newer GPUs like the H100 offer more advanced features, the RTX 2080 Ti provides excellent value, and its relatively affordable cloud GPU price makes it an attractive option for those seeking powerful GPUs on demand without breaking the bank. However, there are still areas where the RTX 2080 Ti falls short of the needs of AI builders and machine learning practitioners.

Strengths

- High performance for large model training and deployment of ML models.

- Relatively affordable cloud GPU price, making it accessible for AI practitioners.

- Strong architecture that competes well with next-gen GPUs in certain benchmarks.

- Availability in various cloud on demand platforms, ensuring easy access.

- Excellent value for those looking to train and serve ML models without the high cost of newer GPUs like the H100.

Areas of Improvement

- Performance per watt trails more recent GPUs, which raises long-term operational costs for sustained workloads.

- Memory capacity (11GB) and bandwidth limit how well it supports extremely large datasets and models.

- Cooling solutions may need upgrades for sustained high-performance tasks in cloud environments.

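- Limited support for newer AI precision formats: its Turing Tensor cores handle FP16 and INT8 but lack the BF16, TF32, and FP8 modes that frameworks increasingly target on next-gen GPUs like the H100 cluster.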

- Cost per unit of training work may not be as competitive as newer models like the GB200 cluster, even though the hourly cloud price is lower.