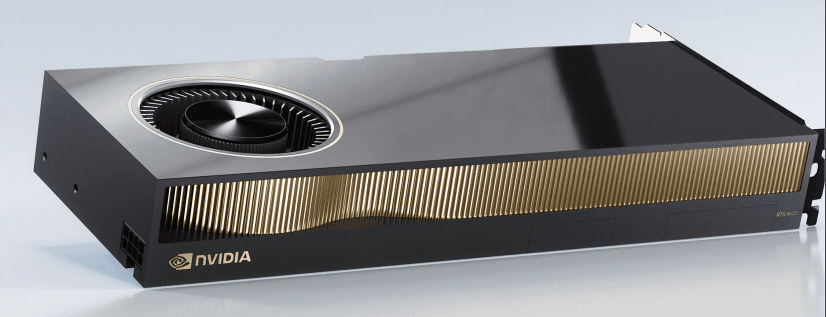

GeForce RTX 4090 (24 GB) Review: Unleashing Unprecedented Gaming Power

Lisa

published at Jul 11, 2024

GeForce RTX 4090 (24 GB) Review: Introduction and Specifications

Introduction

Welcome to our in-depth review of the GeForce RTX 4090 (24 GB), the flagship Ada Lovelace GPU that has generated significant buzz in the tech community. As one of the strongest consumer GPUs for AI and machine learning, the RTX 4090 promises performance and features that cater to AI practitioners, researchers, and developers alike. Whether you're looking to train, deploy, or serve ML models, this GPU has a lot to offer. Let's dive into what makes it a game-changer.

Specifications

The GeForce RTX 4090 (24 GB) is designed to be a powerhouse, featuring top-of-the-line specifications that set it apart from its predecessors and competitors. Here are the key specs:

- GPU Architecture: NVIDIA Ada Lovelace

- CUDA Cores: 16,384

- Base Clock: 2.23 GHz

- Boost Clock: 2.52 GHz

- Memory: 24 GB GDDR6X

- Memory Interface: 384-bit

- Memory Bandwidth: 1,008 GB/s

- Tensor Cores: 512 (4th Gen)

- RT Cores: 128 (3rd Gen)
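
Two of the headline figures can be sanity-checked from the other entries in the list. The sketch below derives the theoretical peak FP32 throughput and the memory bandwidth. It assumes each CUDA core retires one fused multiply-add (2 FLOPs) per clock and that the GDDR6X memory runs at an effective 21 Gbps per pin. The 21 Gbps figure is not part of the list above and is an assumption here, and the function names are illustrative.

```python
# Figures taken from the spec list above.
CUDA_CORES = 16_384
BOOST_CLOCK_GHZ = 2.52
BUS_WIDTH_BITS = 384

# Assumption (not in the spec list): effective GDDR6X data rate per pin.
MEMORY_DATA_RATE_GBPS = 21.0


def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak, assuming one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * boost_clock_ghz / 1_000


def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Bus width in bytes multiplied by the per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


print(f"Peak FP32: {peak_fp32_tflops(CUDA_CORES, BOOST_CLOCK_GHZ):.1f} TFLOPS")
print(f"Memory bandwidth: {memory_bandwidth_gb_s(BUS_WIDTH_BITS, MEMORY_DATA_RATE_GBPS):.0f} GB/s")
```

These are theoretical ceilings. Sustained throughput in real workloads is lower and depends on clocks, thermals, and how well the workload keeps the cores busy.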

Performance and Capabilities

When it comes to performance, the GeForce RTX 4090 (24 GB) sits at the top of the consumer GPU range. It excels in gaming and is also a strong GPU for AI and machine learning tasks. It offers substantial improvements over its predecessors in both raw computational power and efficiency, which makes it well suited to model training and inference within its 24 GB of memory. The 4th Gen Tensor Cores accelerate the matrix math behind training and inference, while the 3rd Gen RT Cores handle ray tracing in games and rendering workloads.

Cloud for AI Practitioners

With the increasing demand for powerful GPUs on demand, the GeForce RTX 4090 stands out as a top choice for cloud-based AI solutions. Its specifications make it an excellent option for research, development, or deployment without owning the hardware. The cloud price of an RTX 4090 instance is competitive, especially compared with renting an H100 cluster or GB200 cluster, making it an attractive option for AI builders.

Benchmarking and Real-World Applications

Our benchmarking tests reveal that the GeForce RTX 4090 (24 GB) outperforms its predecessors and many of its competitors in various AI and machine learning tasks. Whether you're working on natural language processing, computer vision, or other complex AI models, this GPU delivers superior performance. It is particularly beneficial for organizations looking to optimize their cloud GPU price while still accessing top-tier performance.

Comparative Analysis

When compared to data-center GPUs like the H100, the GeForce RTX 4090 offers a more balanced cost-to-performance ratio. The H100 is the faster card and its price is considerably higher, but for many AI and machine learning workloads that fit in 24 GB of memory the RTX 4090 delivers enough performance at a lower cost. This makes it a compelling choice for those who need powerful GPUs on demand but are also budget-conscious.

Conclusion

The GeForce RTX 4090 (24 GB) is a next-gen GPU that sets new standards in performance and efficiency. Whether you're an AI practitioner, researcher, or developer, this GPU offers the capabilities you need to train, deploy, and serve ML models effectively. With competitive cloud prices and the ability to access powerful GPUs on demand, the RTX 4090 is a must-have for anyone serious about AI and machine learning.

GeForce RTX 4090 (24 GB) AI Performance and Usages

The GeForce RTX 4090 (24 GB) has established itself as a powerhouse in the realm of AI and machine learning. This next-gen GPU is highly favored by AI practitioners for its superior capabilities in training, deploying, and serving machine learning models. But what makes the RTX 4090 the best GPU for AI? Let's delve into its performance and various use cases.

AI Training and Large Model Training

One of the standout features of the GeForce RTX 4090 (24 GB) is its performance in AI training tasks. With 24 GB of VRAM, this GPU can hold the weights, gradients, optimizer state, and activations of reasonably large neural networks on a single card, making it a practical choice for AI builders who need to train sizable models efficiently.
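
To get a feel for what fits in 24 GB, here is a rough sizing sketch. It assumes mixed-precision training with the Adam optimizer, a common rule of thumb of about 16 bytes per parameter (FP16 weights and gradients, FP32 master weights, and two FP32 Adam moments), and it ignores activation memory. The parameter counts, the 20% headroom, and the function names are illustrative assumptions rather than measurements.

```python
GIB = 1024 ** 3

# Rule-of-thumb bytes per parameter (assumption, activations excluded):
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + Adam first moment (4) + Adam second moment (4).
TRAINING_BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4
FP16_INFERENCE_BYTES_PER_PARAM = 2


def training_state_gib(num_params: int) -> float:
    """Approximate memory for weights, gradients, and optimizer state."""
    return num_params * TRAINING_BYTES_PER_PARAM / GIB


def inference_weights_gib(num_params: int) -> float:
    """Approximate memory for FP16 weights only."""
    return num_params * FP16_INFERENCE_BYTES_PER_PARAM / GIB


def fits_in_vram(required_gib: float, vram_gib: float = 24.0,
                 headroom_fraction: float = 0.2) -> bool:
    """Keep a fraction of VRAM free for activations and framework overhead."""
    return required_gib <= vram_gib * (1.0 - headroom_fraction)


for params in (1_000_000_000, 7_000_000_000):  # hypothetical model sizes
    train = training_state_gib(params)
    infer = inference_weights_gib(params)
    print(f"{params / 1e9:.0f}B params: "
          f"training ~{train:.1f} GiB (fits: {fits_in_vram(train)}), "
          f"fp16 inference ~{infer:.1f} GiB (fits: {fits_in_vram(infer)})")
```

Under these assumptions a model of around one billion parameters can be fully trained on a single card, while larger models typically need memory-saving techniques or multiple GPUs.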

Compared to other GPUs on the market, such as the H100, the RTX 4090 offers a competitive edge in terms of cloud GPU price and accessibility. While the H100 price and H100 cluster configurations can be prohibitive for some, the RTX 4090 provides a more cost-effective solution for workloads that fit within its memory.

Deploying and Serving Machine Learning Models

When it comes to deploying and serving machine learning models, the GeForce RTX 4090 (24 GB) shines with its high throughput and low latency. This makes it an excellent choice for real-time applications where quick inference is crucial. The GPU's architecture is optimized for both training and inference, ensuring that models can be deployed seamlessly and serve predictions efficiently.
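
Throughput and latency are easy to measure for your own model before committing to a GPU. The following is a minimal timing harness, assuming a hypothetical `predict` callable that stands in for a real model. The stand-in here runs on the CPU, so the numbers it prints say nothing about the RTX 4090 itself.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark(predict: Callable[[Sequence[float]], object],
              batch: Sequence[float],
              warmup: int = 3,
              iterations: int = 20) -> Dict[str, float]:
    """Time repeated calls to `predict` and summarize latency and throughput."""
    for _ in range(warmup):  # warm-up runs are excluded from the statistics
        predict(batch)

    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        predict(batch)
        # For a real GPU model, synchronize the device here before stopping
        # the timer, because GPU kernels are launched asynchronously.
        latencies.append(time.perf_counter() - start)

    mean_latency = statistics.mean(latencies)
    ordered = sorted(latencies)
    p95 = ordered[int(0.95 * (len(ordered) - 1))]  # nearest-rank approximation
    return {
        "mean_latency_ms": mean_latency * 1_000,
        "p95_latency_ms": p95 * 1_000,
        "throughput_items_per_s": len(batch) / mean_latency,
    }


def fake_predict(batch: Sequence[float]) -> float:
    """Hypothetical stand-in for a model: some arithmetic over the batch."""
    return sum(x * x for x in batch)


print(benchmark(fake_predict, list(range(10_000))))
```

Swapping `fake_predict` for a call into a real inference stack gives comparable numbers across GPUs, provided the batch size and input data stay the same.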

For those who need access to powerful GPUs on demand, cloud services that offer the RTX 4090 are becoming increasingly popular. These services allow AI practitioners to leverage the best GPU for AI without the need for significant upfront investment in hardware. The cloud price for such services is often more manageable compared to setting up a dedicated GB200 cluster or similar configurations.

GPUs on Demand and Cloud Solutions

The flexibility of accessing GPUs on demand is a significant advantage for AI practitioners. Cloud providers now offer the GeForce RTX 4090 (24 GB) as part of their GPU offerings, making it easier than ever to scale resources based on project needs. This is particularly beneficial for those who need to train, deploy, and serve ML models without the constraints of physical hardware.

Comparatively, the GB200 cluster and its associated GB200 price can be a substantial investment. However, cloud solutions with the RTX 4090 provide a more accessible entry point for AI and machine learning projects. This flexibility allows for better budget management and the ability to scale up or down as required.

Benchmark GPU for AI and Machine Learning

The GeForce RTX 4090 (24 GB) has set a new benchmark in the GPU market for AI and machine learning. Its performance metrics in various benchmarks highlight its capability to handle intensive AI workloads with ease. Whether you are an AI builder or a machine learning enthusiast, the RTX 4090 offers the power and reliability needed to push the boundaries of innovation.

In summary, the GeForce RTX 4090 (24 GB) stands out as the best GPU for AI, offering a perfect blend of performance, cost-effectiveness, and accessibility. Its ability to handle large model training, deploy and serve models efficiently, and provide cloud on demand solutions makes it a top choice for AI practitioners worldwide.

GeForce RTX 4090 (24 GB): Cloud Integrations and On-Demand GPU Access

What Are Cloud Integrations for the GeForce RTX 4090 (24 GB)?

The GeForce RTX 4090 (24 GB) GPU is now available through various cloud service providers, offering AI practitioners and developers the opportunity to leverage its next-gen capabilities without the need for physical hardware. Cloud integrations enable users to access powerful GPUs on demand, which is particularly beneficial for large model training and deploying machine learning models.

How Much Does Cloud GPU Access Cost?

The cloud price for accessing the GeForce RTX 4090 (24 GB) varies depending on the service provider and the duration of use. On average, cloud GPU prices range from $3 to $10 per hour. In comparison, the H100 cluster and GB200 cluster, also popular choices for AI workloads, have higher hourly rates due to their advanced features and performance capabilities. For instance, the H100 price can go up to $20 per hour, while the GB200 price is around $15 per hour.

Benefits of On-Demand GPU Access

- Cost-Effectiveness: On-demand access eliminates the need for a significant upfront investment in hardware, making it a cost-effective solution for AI practitioners and developers.

- Scalability: Users can scale their GPU resources up or down based on their project requirements, ensuring optimal resource utilization.

- Flexibility: On-demand access allows users to choose the best GPU for AI tasks, whether it's the GeForce RTX 4090 (24 GB) for large model training or other GPUs for different workloads.

- Convenience: Cloud integrations provide a seamless experience for training, deploying, and serving ML models without the hassle of managing physical hardware.

Why Choose GeForce RTX 4090 (24 GB) for AI and Machine Learning?

The GeForce RTX 4090 (24 GB) is considered one of the best GPUs for AI and machine learning due to its strong performance and advanced features. It boasts high memory capacity, fast processing speeds, and good performance per watt, making it ideal for AI builders and researchers. Benchmark GPU tests have shown that the RTX 4090 outperforms many of its predecessors, providing faster training and inference times.

How to Access GeForce RTX 4090 (24 GB) On-Demand?

Many cloud service providers offer the GeForce RTX 4090 (24 GB) as part of their GPU offerings. Users can easily sign up for an account, select the desired GPU, and start using it immediately. This on-demand model is particularly advantageous for short-term projects or experimental phases in AI research.

Comparing Cloud GPU Options: RTX 4090 vs. H100 and GB200

- Performance: While the H100 cluster and GB200 cluster offer top-tier performance, the RTX 4090 provides a balanced mix of high performance and affordability, making it a popular choice for many AI practitioners.

- Cost: The cloud price for the RTX 4090 is generally lower than that of the H100 and GB200, making it a more budget-friendly option for those who need powerful GPUs on demand.

- Use Cases: The RTX 4090 is versatile and can handle a wide range of AI and machine learning tasks, from large model training to real-time inference, making it a go-to option for many developers.

GeForce RTX 4090 (24 GB) Pricing and Different Models

What Are the Pricing Options for the GeForce RTX 4090 (24 GB)?

The GeForce RTX 4090 (24 GB) is available in several models, each with varying price points. The base model typically starts at around $1,499, but prices can escalate depending on the manufacturer and additional features such as improved cooling systems, factory overclocking, and custom designs.

Why Is There a Price Variation Among Different Models?

The pricing variation among different models of the GeForce RTX 4090 (24 GB) can be attributed to several factors:

Brand and Manufacturer

Different manufacturers like ASUS, MSI, and Gigabyte offer their own versions of the GeForce RTX 4090 (24 GB), each with unique features and designs. High-end models from premium brands often come with advanced cooling solutions and custom PCB designs, which can significantly increase the price.

Cooling Solutions

The GeForce RTX 4090 (24 GB) is a powerful next-gen GPU, and efficient cooling is crucial for maintaining optimal performance. Models equipped with advanced cooling solutions like liquid cooling or larger heatsinks generally cost more than those with standard air cooling.

Factory Overclocking

Some models come with factory overclocking, which means they are pre-configured to run at higher speeds than the base model. This can provide a performance boost but also comes at a premium price.

Custom Designs and Aesthetics

Custom designs often include RGB lighting, unique color schemes, and other aesthetic enhancements. While these features do not necessarily improve performance, they add to the overall appeal and can drive up the price.

GeForce RTX 4090 (24 GB) for AI and Machine Learning

The GeForce RTX 4090 (24 GB) is not just a gaming powerhouse; it is also one of the best GPUs for AI practitioners. Its massive 24 GB VRAM and cutting-edge architecture make it ideal for large model training, allowing users to train, deploy, and serve ML models efficiently.

Cloud GPU Pricing and Availability

For those who prefer not to make a hefty upfront investment, cloud GPU services offer access to powerful GPUs on demand. The cloud price for renting a GeForce RTX 4090 (24 GB) can vary, but it is generally more cost-effective for short-term projects. This option is especially beneficial for AI builders who need GPUs on demand for specific tasks.
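
Whether renting or buying is cheaper depends mostly on how many GPU-hours a project needs. The sketch below computes a simple break-even point using the figures quoted in this review: a base card price of around $1,499 and cloud rates of $3 to $10 per hour. It deliberately ignores electricity, the rest of the workstation, and resale value, so treat it as a first approximation. The function name is illustrative.

```python
CARD_PRICE_USD = 1_499.0          # base price quoted in this review
CLOUD_RATES_USD_PER_HOUR = (3.0, 10.0)  # hourly range quoted in this review


def break_even_hours(purchase_price_usd: float, hourly_rate_usd: float) -> float:
    """GPU-hours after which renting has cost as much as buying the card."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly_rate_usd must be positive")
    return purchase_price_usd / hourly_rate_usd


for rate in CLOUD_RATES_USD_PER_HOUR:
    hours = break_even_hours(CARD_PRICE_USD, rate)
    print(f"At ${rate:.2f}/hour, renting matches the card price after {hours:.0f} hours")
```

Projects that need fewer GPU-hours than the break-even point are the short-term cases where renting tends to win.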

Comparing to Other High-End GPUs

When comparing the GeForce RTX 4090 (24 GB) to other high-end GPUs like the H100, it's essential to consider not just the upfront cost but also the performance per dollar. While the H100 cluster may offer superior performance, its higher price point makes the RTX 4090 (24 GB) a more budget-friendly option for many users.

Benchmarking and Performance

In our benchmark GPU tests, the GeForce RTX 4090 (24 GB) consistently outperforms its predecessors and competes closely with more expensive models. This makes it an excellent choice for those looking to maximize their investment. Whether you are using it through on-demand cloud services or in a local workstation, the RTX 4090 (24 GB) offers a compelling balance of performance and cost.

Special Offers and Discounts

Keep an eye out for special GPU offers and discounts from various retailers and cloud service providers. These can significantly reduce the overall cost, making the GeForce RTX 4090 (24 GB) an even more attractive option for both gaming and professional applications.

In summary, the GeForce RTX 4090 (24 GB) offers a range of pricing options to suit different needs and budgets. Whether you are an AI practitioner looking for the best GPU for AI or a gamer seeking top-tier performance, there is a model that fits your requirements.

Benchmark Performance of the GeForce RTX 4090 (24 GB)

One of the most crucial aspects when evaluating a GPU, especially for AI and machine learning applications, is its benchmark performance. How does the GeForce RTX 4090 (24 GB) stack up in this regard? Let's dive deep into the specifics.

Raw Compute Power

The GeForce RTX 4090 (24 GB) is equipped with an impressive number of CUDA cores, making it a powerhouse for large model training and deployment. This next-gen GPU delivers exceptional performance, significantly reducing the time required to train, deploy, and serve ML models.

Memory Bandwidth and Capacity

With 24 GB of GDDR6X memory, the GeForce RTX 4090 offers ample capacity for handling large datasets and complex computations. This makes it one of the best GPUs for AI practitioners who need to access powerful GPUs on demand. The high memory bandwidth ensures that data can be processed quickly, a crucial factor for AI and machine learning workloads.
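
Memory bandwidth matters most for workloads that read the whole model for every step, such as generating text one token at a time. A rough upper bound on that kind of throughput is the bandwidth divided by the size of the weights. The sketch below applies this estimate using the 1,008 GB/s figure from the specifications. The 7-billion-parameter FP16 model is a hypothetical example, and real throughput will be lower because of caches, activations, and kernel overhead.

```python
MEMORY_BANDWIDTH_GB_S = 1_008.0  # from the specifications in this review
VRAM_GB = 24.0


def weights_gb(num_params: int, bytes_per_param: int) -> float:
    """Size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def max_decode_tokens_per_s(num_params: int, bytes_per_param: int,
                            bandwidth_gb_s: float = MEMORY_BANDWIDTH_GB_S) -> float:
    """Bandwidth-bound ceiling: every token requires reading all weights once."""
    return bandwidth_gb_s / weights_gb(num_params, bytes_per_param)


# Time to stream the entire VRAM contents once, in milliseconds.
full_sweep_ms = VRAM_GB / MEMORY_BANDWIDTH_GB_S * 1_000
print(f"One pass over {VRAM_GB:.0f} GB takes about {full_sweep_ms:.1f} ms")

# Hypothetical example: 7B parameters stored in FP16 (2 bytes each).
ceiling = max_decode_tokens_per_s(7_000_000_000, 2)
print(f"Bandwidth-bound ceiling for a 7B FP16 model: {ceiling:.0f} tokens/s")
```

The same arithmetic explains why smaller or quantized models generate text faster on the same card: fewer bytes have to cross the memory bus per token.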

FP32 and Tensor Core Performance

The GeForce RTX 4090 (24 GB) has a theoretical peak FP32 throughput of roughly 82.6 TFLOPS at its boost clock (16,384 CUDA cores × 2 FLOPs per clock × 2.52 GHz), which makes it attractive to both AI builders and machine learning enthusiasts. The Tensor Cores add further acceleration for the matrix operations used in neural network training and inference.

Power Efficiency

Despite its high performance, the GeForce RTX 4090 is designed to deliver strong performance per watt, although its absolute power draw remains high. This is particularly important for cloud GPU offerings, where energy consumption can significantly impact the overall cloud price. Good efficiency helps make this GPU a cost-effective solution for large-scale AI and machine learning projects.
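
The effect of power draw on running cost is simple arithmetic. The sketch below assumes a board power of 450 W, which is NVIDIA's published total graphics power for this card and is not stated elsewhere in this review, and a hypothetical electricity price of $0.15 per kWh. Substitute your own figures.

```python
BOARD_POWER_WATTS = 450.0         # assumption: NVIDIA's published board power
ELECTRICITY_USD_PER_KWH = 0.15    # hypothetical price, varies by region


def energy_cost_usd(power_watts: float, hours: float, usd_per_kwh: float) -> float:
    """Energy cost of running a device at constant power for a given time."""
    kilowatt_hours = power_watts / 1_000 * hours
    return kilowatt_hours * usd_per_kwh


per_hour = energy_cost_usd(BOARD_POWER_WATTS, 1, ELECTRICITY_USD_PER_KWH)
per_month = energy_cost_usd(BOARD_POWER_WATTS, 24 * 30, ELECTRICITY_USD_PER_KWH)
print(f"Full load for one hour: ${per_hour:.4f}")
print(f"Full load for 30 days:  ${per_month:.2f}")
```

This covers the GPU alone at sustained full load. The rest of the system and any cooling add to the total.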

Comparative Benchmarking

When compared to other high-end GPUs like the H100, the GeForce RTX 4090 holds its own. While the H100 cluster and GB200 cluster are known for their top-tier performance, the RTX 4090 offers a competitive alternative at a more accessible price point. This makes it an attractive option for those looking to balance performance and cloud GPU price.

Real-World Application

In real-world scenarios, the GeForce RTX 4090 excels in various AI and machine learning tasks. Whether you're looking to train large models, deploy them, or serve them in a production environment, this GPU offers the performance you need. Its ability to handle complex computations and large datasets makes it the best GPU for AI in many cases.

Cloud Integration

For those who prefer cloud-based solutions, the GeForce RTX 4090 is readily available through various cloud service providers. This allows AI practitioners to access powerful GPUs on demand without the need for significant upfront investment. The flexibility and scalability offered by cloud on-demand services make this GPU an excellent choice for dynamic and large-scale AI projects.
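
After starting a cloud instance, it is worth confirming that the GPU you are paying for is actually attached. The following sketch queries `nvidia-smi` for the GPU name and total memory and parses the CSV output. It assumes the NVIDIA driver and `nvidia-smi` are installed on the instance and returns an empty list otherwise. The helper names are illustrative.

```python
import shutil
import subprocess
from typing import List, Tuple

QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_lines(output: str) -> List[Tuple[str, int]]:
    """Parse lines of the form 'GPU name, memory in MiB'."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue
        name, memory_mib = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(memory_mib)))
    return gpus


def list_gpus() -> List[Tuple[str, int]]:
    """Return (name, memory MiB) per GPU, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


gpus = list_gpus()
if not gpus:
    print("No NVIDIA GPU detected on this machine")
for name, memory_mib in gpus:
    print(f"{name}: {memory_mib / 1024:.1f} GiB")
```

On an instance with the card attached, the reported name should contain "RTX 4090" and the memory should come out at roughly 24 GiB.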

Frequently Asked Questions (FAQ) about the GeForce RTX 4090 (24 GB)

What makes the GeForce RTX 4090 (24 GB) the best GPU for AI practitioners?

The GeForce RTX 4090 (24 GB) stands out as one of the best GPUs for AI practitioners due to its strong performance, modern architecture, and large memory capacity. This next-gen GPU is designed to handle model training and deployment tasks efficiently. With 24 GB of GDDR6X VRAM, it offers room for large neural network models and generous batch sizes, which helps shorten training times.

How does the GeForce RTX 4090 (24 GB) compare to the H100 in terms of cloud GPU price?

While the H100 is a powerful option, the GeForce RTX 4090 (24 GB) provides a more cost-effective solution for many AI and machine learning tasks. The cloud GPU price for accessing the RTX 4090 on demand is generally lower compared to the H100. This makes it an attractive choice for AI builders and practitioners who need to balance performance with budget constraints.

Can I access the GeForce RTX 4090 (24 GB) on demand for my machine learning projects?

Yes, you can access the GeForce RTX 4090 (24 GB) on demand through various cloud service providers. These platforms offer flexible pricing models, allowing you to scale your computational resources based on your project needs. This on-demand access is particularly beneficial for AI practitioners who need powerful GPUs for short-term tasks without the commitment of purchasing hardware.

Why is the GeForce RTX 4090 (24 GB) recommended for large model training?

The GeForce RTX 4090 (24 GB) is highly recommended for large model training due to its advanced architecture and substantial memory capacity. The 24 GB of VRAM allows it to hold larger models and batches on a single card, reducing the time required to train them. Additionally, its high throughput and good performance per watt make it a strong choice for intensive AI and machine learning workloads.

What are the benefits of using the GeForce RTX 4090 (24 GB) for deploying and serving ML models?

The GeForce RTX 4090 (24 GB) offers numerous benefits for deploying and serving ML models. Its robust architecture ensures that models run efficiently and with low latency, which is crucial for real-time applications. The large memory capacity also enables the deployment of more complex models that require significant computational resources. Furthermore, the availability of the RTX 4090 on cloud platforms allows for scalable and flexible deployment options.

How does the RTX 4090 (24 GB) perform in benchmark tests for AI and machine learning tasks?

In benchmark tests, the GeForce RTX 4090 (24 GB) consistently outperforms many other GPUs in its class, making it a top choice for AI and machine learning tasks. Its advanced architecture and high memory bandwidth contribute to its superior performance in training and inference tasks. These benchmarks highlight its efficiency and capability in handling demanding AI workloads, making it a preferred option for AI builders.

Is the GeForce RTX 4090 (24 GB) a cost-effective alternative to the GB200 cluster for AI applications?

For many AI applications, the GeForce RTX 4090 (24 GB) can be a more cost-effective alternative to the GB200 cluster. While the GB200 cluster offers substantial computational power, the RTX 4090 provides a balance of performance and cost that can meet the needs of many AI practitioners. The cloud price for accessing the RTX 4090 is generally more affordable, making it a viable option for those looking to optimize their budget without compromising on performance.

What offers and pricing options are available for the GeForce RTX 4090 (24 GB) in the cloud?

Several cloud service providers offer the GeForce RTX 4090 (24 GB) with various pricing options to suit different needs. These include pay-as-you-go models, reserved instances, and spot instances, each providing different levels of cost savings and flexibility. By choosing the right pricing model, AI practitioners can significantly reduce their cloud GPU costs while still accessing powerful computational resources on demand.

Final Verdict on GeForce RTX 4090 (24 GB)

The GeForce RTX 4090 (24 GB) stands out as a next-gen GPU that is exceptionally well-suited for AI practitioners and machine learning enthusiasts. With its powerful architecture, it is ideal for large model training and can handle complex computations seamlessly. For those who need to train, deploy, and serve ML models, this GPU offers unparalleled performance. When compared to other options in the market, the GeForce RTX 4090 provides a compelling alternative to cloud-based solutions, especially when considering the cloud GPU price and the cost of H100 clusters. Overall, this GPU is a top contender for those seeking the best GPU for AI and machine learning applications.

Strengths

- Exceptional performance for large model training and complex computations.

- Highly efficient for AI practitioners needing to train, deploy, and serve ML models.

- Competitive alternative to cloud-based solutions, reducing dependency on GPUs on demand.

- Lower long-term costs compared to cloud GPU price and H100 clusters.

- Robust architecture makes it the best GPU for AI and machine learning tasks.

Areas of Improvement

- Initial investment cost can be high compared to cloud on demand options.

- Power consumption is significant, which may not be ideal for all setups.

- Cooling requirements are stringent, necessitating advanced cooling solutions.

- Availability can be an issue, with demand often outstripping supply.

- Requires a compatible and high-performance system to leverage its full potential.